Let's play safe and fair

When two hurricanes pummeled the south-eastern US in quick succession this autumn, the resulting outages left millions of households and businesses without electricity, in some cases for weeks on end. Unfortunately, events like these are set to become more frequent in the coming years, and experience shows that the poorest communities are usually the worst affected.

This is an example of how system failures are entwined with problems of social equity. When infrastructure breaks down, those hit hardest are often the people with the fewest resources and the least support to help them cope. Because failures affect different people differently, we need to make these systems more resilient, both to more frequent extreme events and to growing demand. By increasing robustness, we can limit the damage to vulnerable populations and so improve fairness.

This fairness problem has another aspect: resource shortages are often driven by individual overuse. That’s the infamous tragedy of the commons: when resources are publicly shared, the actions of self-interested individual users are likely to cause degradation that ultimately hurts everyone. This tension lies behind everyday problems ranging from traffic congestion to overfishing. The most extreme example is climate change: developing countries are already bearing the brunt of droughts and flooding, although they have contributed least to the emissions causing the problem.

But what does this have to do with automation?

First prioritise, then control

Sharing limited resources fairly forces trade-offs and difficult decisions. Fairness is not a one-dimensional metric: researchers across many disciplines have developed a wide range of fairness measures, each suited to particular cultural contexts and situations. Choosing among them is a philosophical question, and not one for engineers to answer. Should we first guarantee a minimum level of fairness and then maximise the efficiency of the system? Or the other way round: is it better to maximise fairness subject to a set minimum efficiency? These questions go well beyond practicalities!
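
To make the two orderings concrete, here is a toy Python sketch. It assumes three users sharing 9 units of a resource, with hypothetical efficiencies that convert each user's share into the service they actually receive. Fairness is measured as the service of the worst-off user (max-min fairness, just one of the many possible measures), and Jain's fairness index is reported alongside it. The names `fairness_first` and `efficiency_first` are illustrative, not an established API, and brute-force enumeration only works for tiny examples like this one.

```python
from itertools import product

# Hypothetical toy problem (all numbers are illustrative assumptions):
# 9 units of a shared resource are split among three users whose shares
# turn into service with different efficiencies, e.g. due to distribution losses.
SUPPLY = 9
EFFICIENCY = (1.0, 0.8, 0.5)


def allocations():
    """Yield every split of SUPPLY integer units among the users."""
    for split in product(range(SUPPLY + 1), repeat=len(EFFICIENCY)):
        if sum(split) == SUPPLY:
            yield split


def service(split):
    """Service each user actually receives from their share."""
    return [e * x for e, x in zip(EFFICIENCY, split)]


def jain_index(values):
    """Jain's fairness index: 1.0 when all users get equal service, 1/n at worst."""
    squares = sum(v * v for v in values)
    return sum(values) ** 2 / (len(values) * squares) if squares else 1.0


def fairness_first(min_service):
    """Guarantee each user at least `min_service`, then maximise total service."""
    feasible = [a for a in allocations() if min(service(a)) >= min_service]
    return max(feasible, key=lambda a: sum(service(a)))


def efficiency_first(min_total):
    """Guarantee total service of at least `min_total`, then maximise the
    service of the worst-off user (max-min fairness)."""
    feasible = [a for a in allocations() if sum(service(a)) >= min_total]
    return max(feasible, key=lambda a: min(service(a)))


for name, split in [("fairness first", fairness_first(1.5)),
                    ("efficiency first", efficiency_first(7.5))]:
    s = service(split)
    print(f"{name}: units={split}, total={sum(s):.1f}, "
          f"worst-off={min(s):.1f}, Jain={jain_index(s):.2f}")
```

With a fairness floor of 1.5 per user, the first rule settles on the split (4, 2, 3) and a total service of 7.1; demanding a total of at least 7.5 instead yields (5, 2, 2), where the worst-off user receives only 1.0. Same resource, same users, but a different ordering of priorities leads to a different allocation.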

However, while policy makers are the ones who have to grapple with the big questions (such as who should be prioritised, what minimum service level is acceptable, and how to balance that minimum service for individuals against reliable provision for everyone), engineers, and control theorists in particular, have a role to play. Although this role is largely invisible, it has a major real-life impact.

Our shared infrastructure systems are massive and need to be adjusted on a second-by-second basis, so we really need algorithms to manage them. What if we could use that automation to make these systems fairer?

Control is at work in everything from automatic traffic routing and energy distribution in demand-response schemes to the automated control of groundwater supply to farmers. In all these systems, mathematics can be put to work to make the outcomes more equitable.

One of the fields addressing such questions is game theory, which grew out of mathematics and economics and deals with conflict and cooperation between self-interested decision-makers, such as users accessing shared resources. In automation, game theory is used with the aim of steering the system towards an effective outcome that is fair to all users.
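
The tragedy of the commons can be written down as a small game. In this hypothetical Python sketch, N users each draw x_i units from a shared resource, and every unit is worth A - B*X to the user who draws it, where X is the total drawn by everyone. The linear value model and the parameter values are assumptions chosen for illustration. When every user simply maximises their own payoff, the result is a Nash equilibrium in which the resource is overused compared with the social optimum.

```python
# Hypothetical commons game (illustrative parameters, not from any real system):
# N users each draw x_i units from a shared resource, and every unit drawn is
# worth A - B * X to its user, where X is the total drawn by everyone.
A, B, N = 10.0, 1.0, 4


def payoff(own: float, others_total: float) -> float:
    """Payoff of one user drawing `own` units while the others draw `others_total`."""
    return own * (A - B * (own + others_total))


def nash_share() -> float:
    """Symmetric Nash equilibrium. Each user's first-order condition is
    A - B*X - B*x_i = 0; with X = N*x_i this gives x_i = A / (B*(N + 1))."""
    return A / (B * (N + 1))


def optimal_share() -> float:
    """Equal share of the total X* = A / (2B) that maximises X * (A - B*X)."""
    return A / (2 * B) / N


def total_welfare(share: float) -> float:
    """Sum of all users' payoffs when everyone draws the same `share`."""
    return N * payoff(share, (N - 1) * share)


def is_nash(share: float, grid_step: float = 0.01) -> bool:
    """Check on a grid that no single user gains by deviating unilaterally."""
    others = (N - 1) * share
    base = payoff(share, others)
    deviations = (k * grid_step for k in range(round(A / grid_step) + 1))
    return all(payoff(d, others) <= base + 1e-9 for d in deviations)


x_ne, x_opt = nash_share(), optimal_share()
print(f"Nash equilibrium: each draws {x_ne:.2f}, welfare {total_welfare(x_ne):.2f}")
print(f"Social optimum:   each draws {x_opt:.2f}, welfare {total_welfare(x_opt):.2f}")
print("Equilibrium is stable:", is_nash(x_ne))
print("Social optimum is stable:", is_nash(x_opt))
```

With A = 10, B = 1 and N = 4, each user draws 2 units at equilibrium (8 in total) for a combined payoff of 16, whereas limiting total use to 5 units would give 25. The optimum is not self-enforcing, though: any single user gains by drawing more, which is exactly why such outcomes have to be designed for rather than left to individual restraint.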

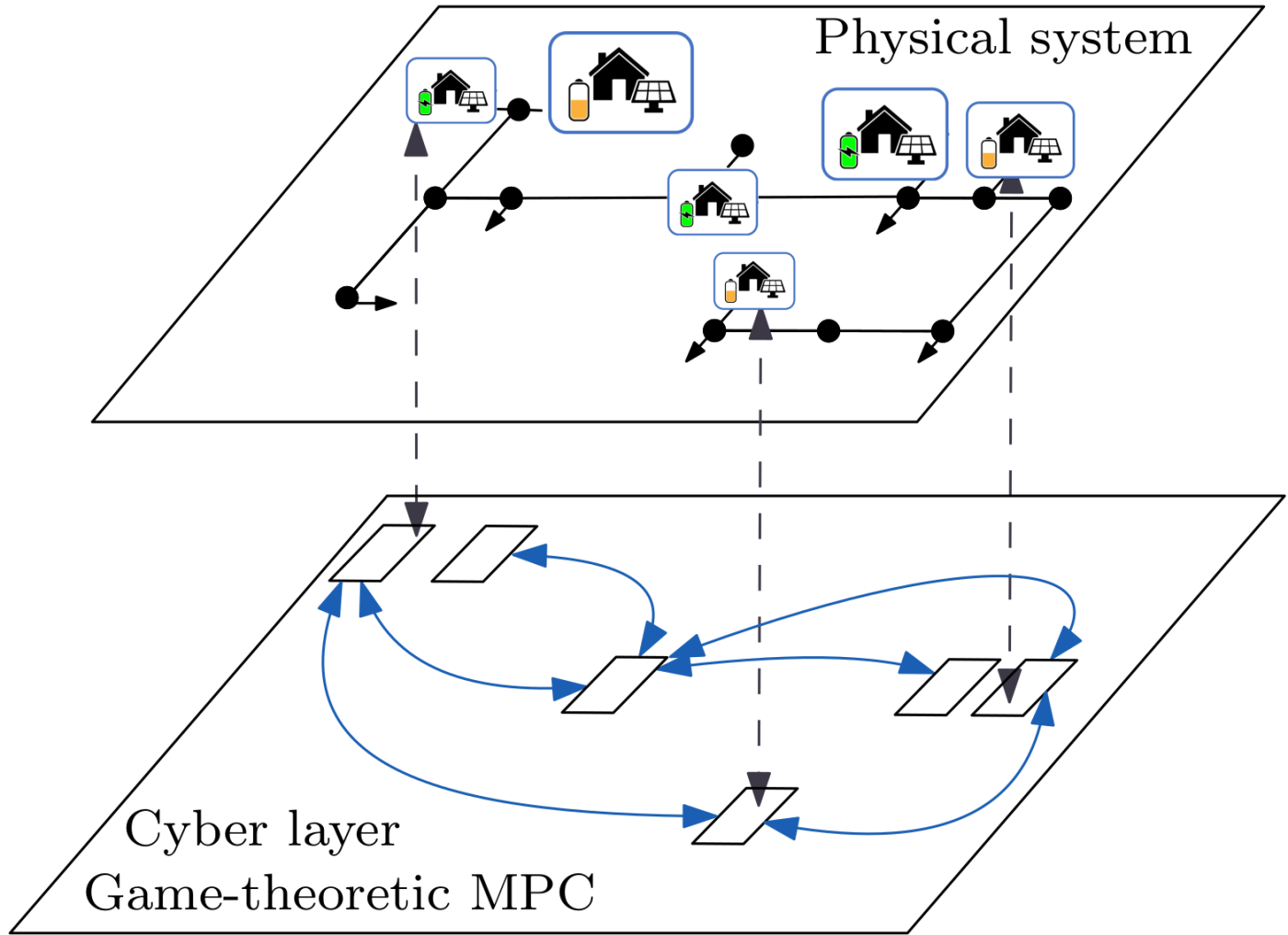

Model predictive control (MPC), a well-established control methodology for complex systems, adds the system constraints: what the infrastructure can physically deliver, and which limits must be respected. At the same time, it incorporates predictions of the system’s future states into real-time decision-making.
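
The receding-horizon idea behind MPC can be sketched in a few lines of Python. The example models a hypothetical reservoir, not a system from the text. At each step the controller enumerates every release sequence over a short horizon, discards those whose predicted storage level would leave the range [0, S_MAX], picks the one that best matches forecast demand, applies only its first move, and then re-plans with a shifted forecast. Practical MPC implementations solve this optimisation with a numerical solver rather than by brute force; enumeration is used here only to keep the sketch self-contained.

```python
from itertools import product

# Hypothetical reservoir (illustrative numbers): storage level s in units,
# release u per step, dynamics s[k+1] = s[k] + inflow[k] - u[k].
S_MAX, U_MAX, HORIZON = 10, 3, 3
RELEASE_OPTIONS = range(U_MAX + 1)  # discretised inputs 0..U_MAX


def plan_cost(level, releases, inflow_forecast, demand_forecast):
    """Predicted cost of a release sequence, or None if it breaks a constraint."""
    cost = 0
    for u, inflow, demand in zip(releases, inflow_forecast, demand_forecast):
        level = level + inflow - u
        if not 0 <= level <= S_MAX:
            return None  # reservoir would run dry or overflow
        cost += (demand - u) ** 2  # penalise deviation from demand
    return cost


def mpc_step(level, inflow_forecast, demand_forecast):
    """Solve the finite-horizon problem by enumeration; return only the first move."""
    best_cost, best_first = None, None
    for plan in product(RELEASE_OPTIONS, repeat=HORIZON):
        cost = plan_cost(level, plan, inflow_forecast, demand_forecast)
        if cost is not None and (best_cost is None or cost < best_cost):
            best_cost, best_first = cost, plan[0]
    if best_first is None:
        raise RuntimeError("no feasible plan over the horizon")
    return best_first


def simulate(level, inflows, demands):
    """Closed loop: re-plan at every step with a shifted forecast window.
    Forecasts are assumed perfect here (actual inflow equals the forecast)."""
    history = []
    for k in range(len(inflows)):
        window_in = (inflows[k:] + [0] * HORIZON)[:HORIZON]
        window_dem = (demands[k:] + [0] * HORIZON)[:HORIZON]
        u = mpc_step(level, window_in, window_dem)
        level = level + inflows[k] - u
        history.append((u, level))
    return history


for step, (u, level) in enumerate(simulate(5, [2, 0, 0, 3, 1, 0], [1, 3, 3, 2, 2, 3])):
    print(f"step {step}: release {u}, level {level}")
```

Called as `mpc_step(10, [3, 3, 3], [0, 0, 0])`, the controller releases 3 units even though there is no demand, because the forecast inflow would otherwise overflow the full reservoir: the constraints take precedence over the objective.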

Combining MPC with game theory can ensure efficient system operation while fulfilling predefined societal goals, such as equitable distribution or specific fairness measures, within an automated system. However, one major step is missing before such algorithms can be used in real life: rigorous guarantees of safety and robustness that prevent system failures. For example, how will safety and resilience be affected by disturbances to the system (such as measurement errors or failures in communication channels)? Or by incorrect predictions (of the weather, resource availability, etc.), by individual users joining or leaving the scheme, or by changes in their preferences?
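
One standard way to keep a predictive controller safe despite incorrect predictions is constraint tightening: if the forecast error is known to be bounded by W, the controller plans against limits shrunk by W, so the true state stays within the real limits. The toy Python sketch below applies this to a hypothetical storage system with a one-step controller. The error bound, the data and the function names are illustrative assumptions, and constraint tightening is a generic textbook device, not the specific design paradigms discussed here.

```python
# Hypothetical storage system with uncertain inflow forecasts (illustrative numbers).
S_MAX, U_MAX = 10, 3
W = 1  # assumed bound on forecast error: |actual - forecast| <= W


def choose_release(level, inflow_forecast, demand, margin):
    """Pick the release closest to demand whose *predicted* level stays
    inside the tightened band [margin, S_MAX - margin]."""
    feasible = [u for u in range(U_MAX + 1)
                if margin <= level + inflow_forecast - u <= S_MAX - margin]
    if not feasible:
        raise RuntimeError("no release keeps the prediction inside the band")
    return min(feasible, key=lambda u: (demand - u) ** 2)


def simulate(level, forecasts, actuals, demands, margin):
    """Apply the one-step controller; the plant responds to the actual inflow."""
    levels = []
    for forecast, actual, demand in zip(forecasts, actuals, demands):
        u = choose_release(level, forecast, demand, margin)
        level = level + actual - u  # the forecast error enters here
        levels.append(level)
    return levels


forecasts = [2, 2, 2, 2]
actuals = [3, 3, 1, 3]  # each within W of its forecast
demands = [1, 1, 1, 1]
for margin in (0, W):
    levels = simulate(8, forecasts, actuals, demands, margin)
    ok = all(0 <= s <= S_MAX for s in levels)
    print(f"margin={margin}: levels={levels}, within limits: {ok}")
```

Without a margin, the controller trusts the forecast and the storage overflows to 11 at the second step; with a margin equal to the error bound, the level never exceeds capacity. The price is a more conservative controller that releases more than demanded when storage is nearly full, and a one-step horizon sidesteps the harder question of whether a feasible action will always exist in the future (recursive feasibility).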

I am working to fill that theoretical gap, in particular by trying to identify design paradigms that ensure stable operation and consistent outcomes when controllers are installed in a real system and run without supervision.

Many possible ways to manage resources better

Finding a path to more sustainable resource usage doesn’t have to involve game-theoretic MPC. In a recent project with Google, for instance, we worked to maximise the amount of computational load run on “green” energy, and in another we worked with tiko Energy to develop controllers for energy management in smart homes. Both projects show the power of individual organisations to reduce carbon emissions: in one case through automated job allocation within a company; in the other through systems that reduce peak energy consumption and increase the use of renewable energy sources across an entire neighbourhood.

Although these projects revolved around energy, energy isn’t the focus of my research, which is much more about creating tools that can be applied in any complex, large-scale system with limited resources. Especially in industrialised countries, we are used to a comfortable world of seemingly infinite resources, but our changing climate and unstable geopolitics show that this is an illusion. We need to think about how to distribute the resources we do have. Who gets how much, when, and why? And how can we address the racial and class inequities at work in resource allocation?

If we want to ensure that our shared infrastructure supports social fairness and equitable opportunities, building robust safety into the design of automated solutions is an urgent need. It’s exciting to see how automation can be part of the solution to that challenge.