Decision-making in a data-driven world

Imagine no longer having to drive through rush-hour traffic on your way to work. No longer having to let road works spoil your holiday trip, or having to deal with terrible road users. Instead, you could relax and answer emails in a flying taxi or devote yourself to your loved ones in a self-driving family car. And all this without having to adhere to public transport timetables. Sounds like science fiction?

It could become a reality sooner than you think.

Better safe than sorry

Artificial intelligence (AI) has long promised to unlock this vision using clever algorithms, supercomputers, and the vast amounts of data that are at our disposal nowadays. But why are we not there yet? In a word: safety. You would never hop in a car that is not certifiably safe.

Although current automated systems can already match or even outperform humans at certain tasks, failures can still happen, sometimes with catastrophic consequences, as Tesla crashes have demonstrated. What is worse, engineers cannot always explain the cause of such errors.

The design of autonomous systems relies crucially on models. Consider autopilot systems, for example: their design depends on accurate models of how an airplane works.

Models are essential to design and build airplanes, train pilots in simulators, and minimize the cost of operations, for example by reducing fuel consumption. They are also important for inferring hidden (non-measured) yet crucial system states, such as the attitude of the plane based on gyroscope measurements. Finally, models are what allow autopilots to perform well in the face of uncertainty and unforeseen events, such as avoiding unexpected obstacles.

Models are so important because they allow us to simulate reality and plan future actions.

Back to the future

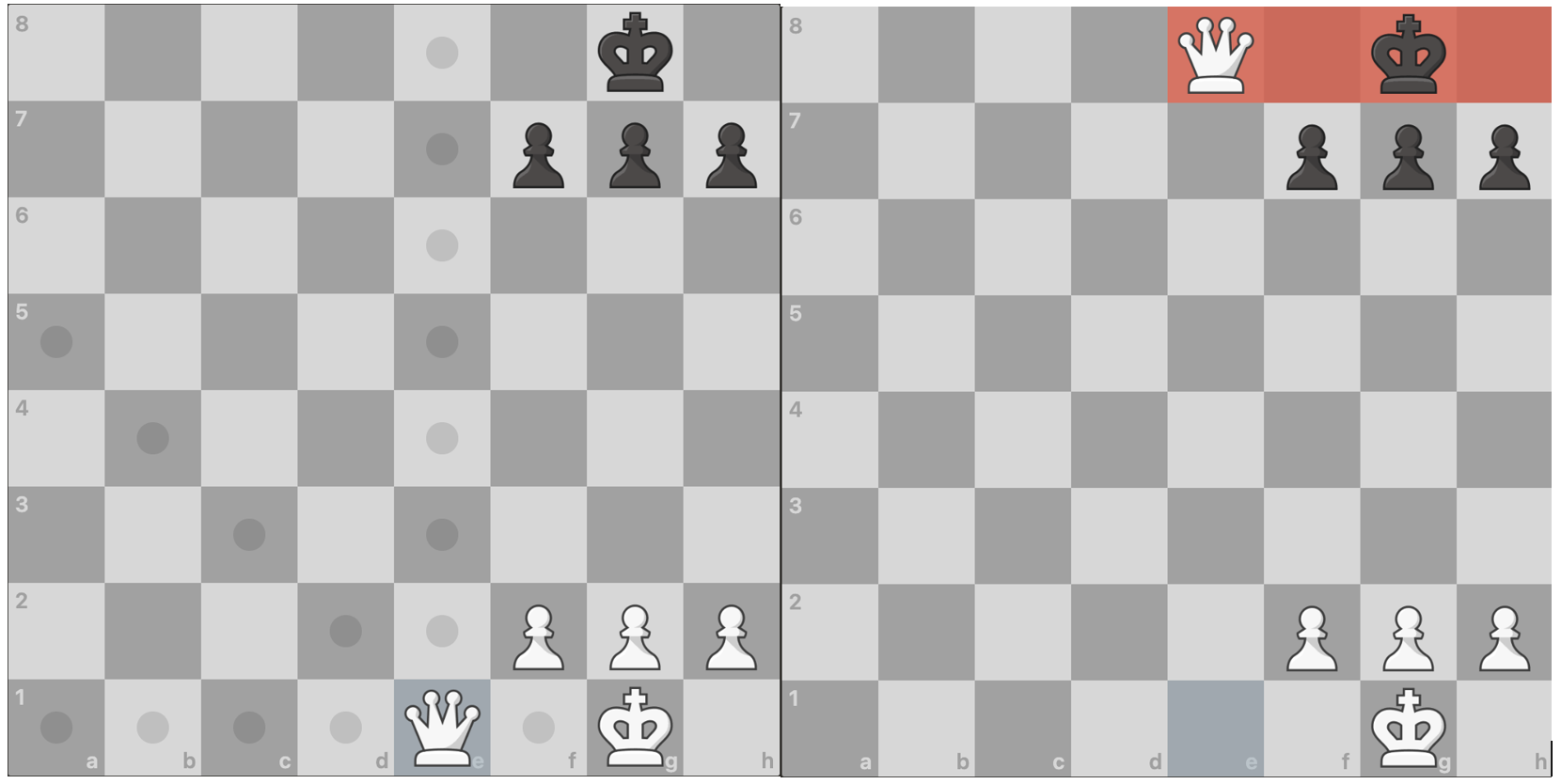

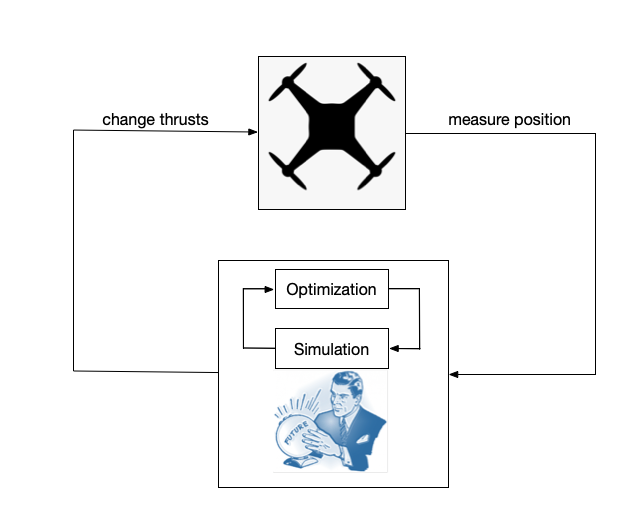

The ability to simulate and act on the future is the essence of Model Predictive Control (MPC), an incredibly powerful approach developed in control theory. Put simply, an MPC algorithm uses (dynamical system) models (like the equations that describe how a quadcopter flies) to simulate the near future and make decisions based on the outcome of the simulation. As an analogy, think about a chess game. If you want to move your Queen around, you can only move in certain ways. The best move is chosen based on your ability to think about future moves.

Similarly, an MPC algorithm comes up with a plan based on a simulation of the near future and executes one step of that plan. Based on the observations after this step, the algorithm goes back to the simulation and re-evaluates: Is the plan still the right one? Or is there a better variant based on the new situation?

By sensing, actuating, and optimizing in real time, the MPC algorithm closes a feedback loop with reality.

One caveat remains, however: the further into the future the model predicts, the less reliable it becomes. Again, think about chess. You can plan many moves into the future, but if you plan too far into the future, your opponent will inevitably play an unexpected move. Makes sense, right? The feedback loop allows an MPC algorithm to connect the actual impact of its action with what it previously simulated. This way, it can correct for mismatches between the model and reality as well as other unexpected errors.
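To make the plan, act, observe, re-plan cycle concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than a real controller: the one-dimensional `model`, the `plant` with a drift the model does not know about, and the tiny set of allowed moves that is searched by brute force, much like trying out chess moves in your head.

```python
import itertools

def model(x, u):
    # Hypothetical model: the position changes by exactly the chosen move.
    return x + u

def plant(x, u):
    # "Reality" differs from the model: there is a drift the model ignores.
    return x + u + 0.3

def plan(x, target, horizon=3, moves=(-1.0, 0.0, 1.0)):
    # Simulate every move sequence over the horizon and keep the cheapest one.
    best_cost, best_seq = float("inf"), None
    for seq in itertools.product(moves, repeat=horizon):
        sim, cost = x, 0.0
        for u in seq:
            sim = model(sim, u)
            cost += (sim - target) ** 2
        if cost < best_cost:
            best_cost, best_seq = cost, seq
    return best_seq

def run_mpc(x0, target, steps=10):
    x, history = x0, [x0]
    for _ in range(steps):
        u = plan(x, target)[0]  # execute only the first move of the plan
        x = plant(x, u)         # observe what actually happened
        history.append(x)       # the next plan starts from this observation
    return history

trajectory = run_mpc(x0=0.0, target=5.0)
print(trajectory)
```

Even though the model is wrong at every step, re-planning from the measured position keeps the system close to the target. That is the feedback loop at work.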

The dance between simulation and feedback actions allows MPC algorithms to do pretty amazing things. Of course, there’s no such thing as a free lunch: sometimes MPC algorithms can fail miserably. Often, this depends on the quality of the models. Think about a complex humanoid robot whose goal is to run across obstacles. Finding a model for this task is very time-consuming and expensive, and it depends on expert knowledge that may not be available.

These drawbacks are not unique to this particular task. In fact, finding a model almost always requires a great deal of data along with specialized algorithms and expert domain knowledge.

Swimming in the data deluge

An alternative approach is to entirely bypass dynamical system models and learn how to make decisions directly from data. This data-driven approach is favored in many application domains, powered by advances in machine learning, and promises exciting developments such as the so-called pixels-to-control paradigm enabling end-to-end automation in autonomous driving.

The truth is, however, that to date, neither the AI nor the control research communities know how to design data-driven controllers that perform reliably in safety-critical and real-time environments.

So it seems like we are at an impasse. We know how to make decisions when we have dynamical system models, but such models are sometimes hard to get.

Conversely, making decisions directly from data is hard, and requires a significant amount of time and computational power, even for very simple tasks. A considerable world-wide research thrust called “data-driven control” explores such questions.

DeePC: model-free MPC

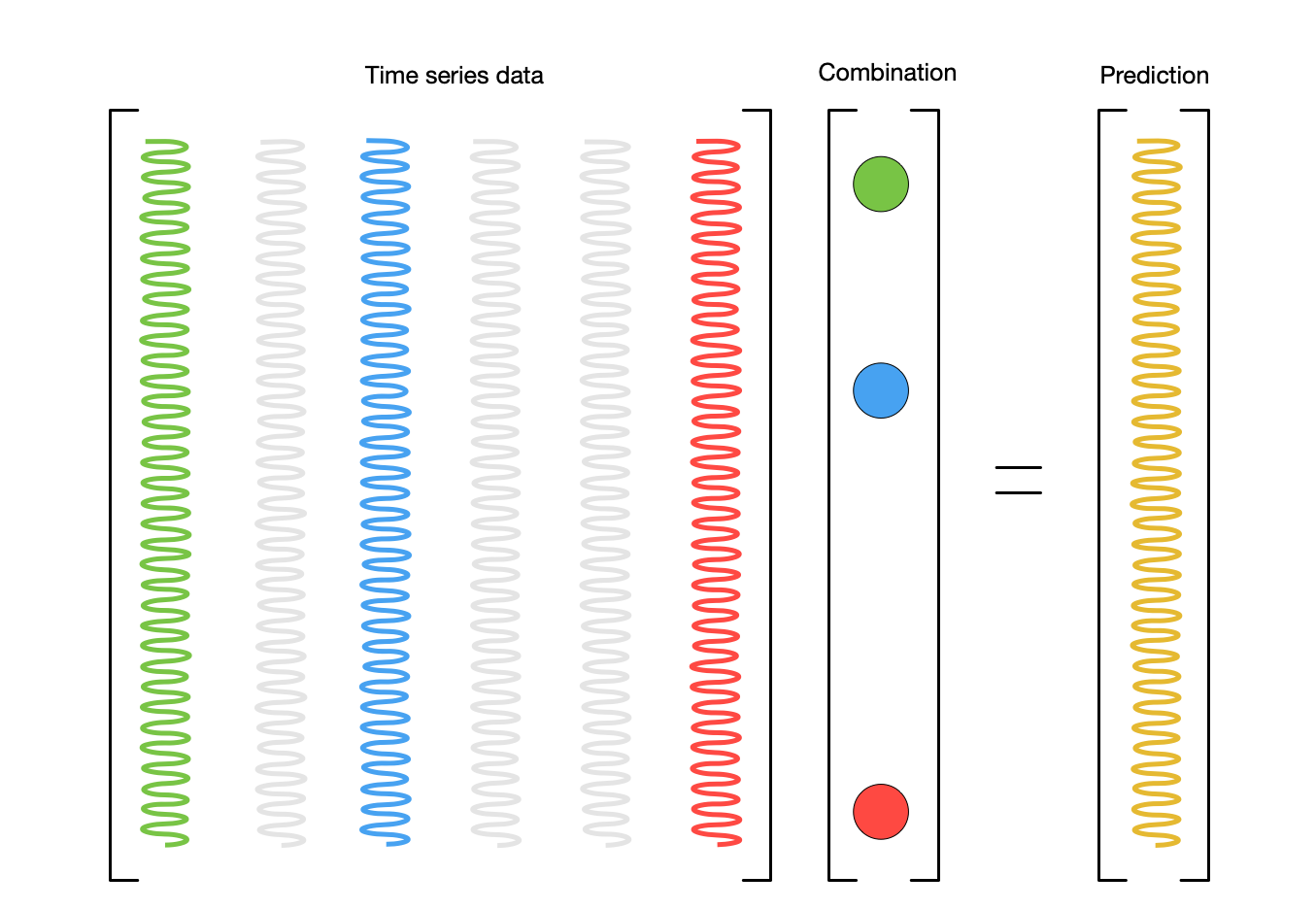

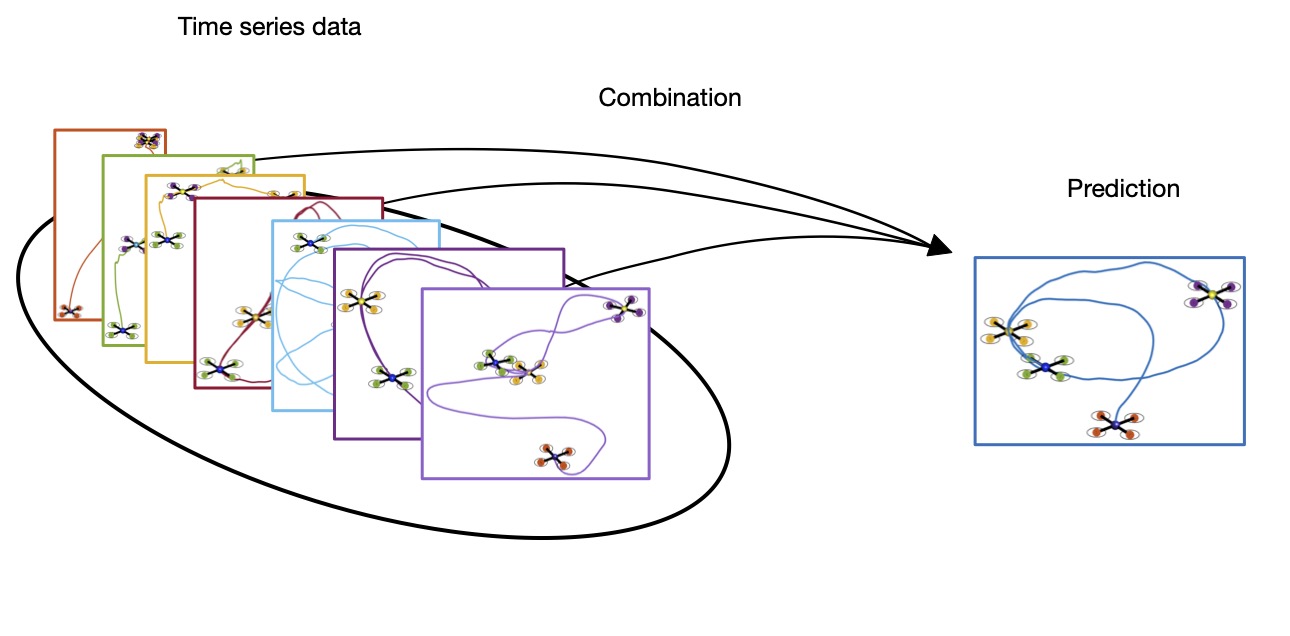

Our solution to this chicken-and-egg problem is simple. Perhaps unsurprisingly, we would like to take the best of both worlds. Here is how we go about it. The first idea is to replace dynamical system models with raw data matrices of stacked time series measurements.

Consider the columns of the matrix to represent different valid behaviors. In the case of a quadcopter, different valid behaviors could be different flight trajectories. Columns of the data matrix are thus constructed, for example, by measuring the position and velocity at different time instants.

Under reasonable assumptions, any linear combination of the columns of the data matrix is guaranteed to be another valid behavior. In other words, raw data matrices can be thought of as predictive models.
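The following sketch illustrates this idea with NumPy. It assumes a simple linear system with hypothetical parameters `a` and `b`, which are used only to generate the data and to check the result afterwards. The helper `hankel` is likewise an illustrative name: it stacks sliding windows of a time series as columns.

```python
import numpy as np

def hankel(signal, depth):
    # Each column is one window of `depth` consecutive samples.
    cols = len(signal) - depth + 1
    return np.column_stack([signal[i:i + depth] for i in range(cols)])

rng = np.random.default_rng(0)
a, b = 0.9, 0.5   # hypothetical system: y[k+1] = a*y[k] + b*u[k]
T, L = 30, 5      # number of recorded samples and window length

# Record one experiment with a random input.
u = rng.standard_normal(T)
y = np.zeros(T)
for k in range(T - 1):
    y[k + 1] = a * y[k] + b * u[k]

# Data matrix: every column is a measured input/output window.
H = np.vstack([hankel(u, L), hankel(y, L)])

# Mix the columns with arbitrary weights to get a new candidate behavior.
g = rng.standard_normal(H.shape[1])
w = H @ g
u_new, y_new = w[:L], w[L:]

# The new window obeys the same dynamics, although it was never measured.
residual = y_new[1:] - (a * y_new[:-1] + b * u_new[:-1])
print(np.allclose(residual, 0.0))
```

The weights `g` play the role that the model equations would normally play: choosing `g` selects one behavior out of all those the data can explain.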

The second idea is to feed these simulated behaviors into an MPC scheme, much like what we have seen above but with purely data-driven predictive models.

Putting all these pieces together results in a data-driven MPC algorithm, which we call Data-Enabled Predictive Control (DeePC). Under reasonable assumptions, DeePC is not only guaranteed to match the performance of traditional MPC algorithms, but it also offers several extra benefits. Indeed, you do not need the expert knowledge that is usually required to find a model. Anyone with access to enough data already has a model for free, without needing to do any of the hard work! And DeePC is incredibly easy to implement.
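As a rough illustration of how the pieces fit together, here is a stripped-down sketch of a data-driven predictive control loop in Python with NumPy. It is a sketch under stated assumptions, not our actual implementation: the names `hankel` and `deepc_plan`, the test system with parameters `a` and `b`, and the input weight `lam` are all hypothetical, and the sketch ignores constraints and measurement noise, which a practical controller would need to handle.

```python
import numpy as np

def hankel(signal, depth):
    # Each column is one window of `depth` consecutive samples.
    cols = len(signal) - depth + 1
    return np.column_stack([signal[i:i + depth] for i in range(cols)])

rng = np.random.default_rng(1)
a, b = 0.9, 0.5      # hypothetical plant, unknown to the controller
T, T_ini, N = 40, 1, 5

# Offline: record one experiment with a random input.
u_data = rng.standard_normal(T)
y_data = np.zeros(T)
for k in range(T - 1):
    y_data[k + 1] = a * y_data[k] + b * u_data[k]

# Split each data window into a "past" part and a "future" part.
Hu, Hy = hankel(u_data, T_ini + N), hankel(y_data, T_ini + N)
U_p, U_f = Hu[:T_ini], Hu[T_ini:]
Y_p, Y_f = Hy[:T_ini], Hy[T_ini:]

def deepc_plan(u_ini, y_ini, ref, lam=0.01):
    # Find column weights g whose past part matches the latest measurements
    # and whose future part tracks `ref` with a small input penalty.
    A = np.vstack([U_p, Y_p])
    w_ini = np.concatenate([u_ini, y_ini])
    g0 = np.linalg.pinv(A) @ w_ini           # one g consistent with the past
    _, s, Vt = np.linalg.svd(A)
    Z = Vt[int(np.sum(s > 1e-9)):].T         # directions that keep the past fixed
    C = np.vstack([Y_f, np.sqrt(lam) * U_f])
    d = np.concatenate([np.full(N, ref), np.zeros(N)])
    z, *_ = np.linalg.lstsq(C @ Z, d - C @ g0, rcond=None)
    return U_f @ (g0 + Z @ z)                # planned future inputs

# Online: plan, apply the first input, measure, and repeat.
x, outputs = 0.0, []
u_ini, y_ini = np.zeros(T_ini), np.zeros(T_ini)
for _ in range(30):
    u0 = deepc_plan(u_ini, y_ini, ref=1.0)[0]
    y0 = x                    # measured output at the current step
    x = a * x + b * u0        # the real system responds
    u_ini = np.append(u_ini[1:], u0)
    y_ini = np.append(y_ini[1:], y0)
    outputs.append(x)

print(outputs[-1])
```

Note that `a` and `b` appear only where data is generated and where the real system responds. The planner itself sees nothing but the recorded data matrices and the latest measurements.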

Over the past few years, we have developed DeePC and tested its capabilities in a number of case studies, including power systems, quadcopters, autonomous walking excavators, and traffic. Here’s how DeePC performs in controlling the motion of a quadcopter and even a 12-ton excavator:


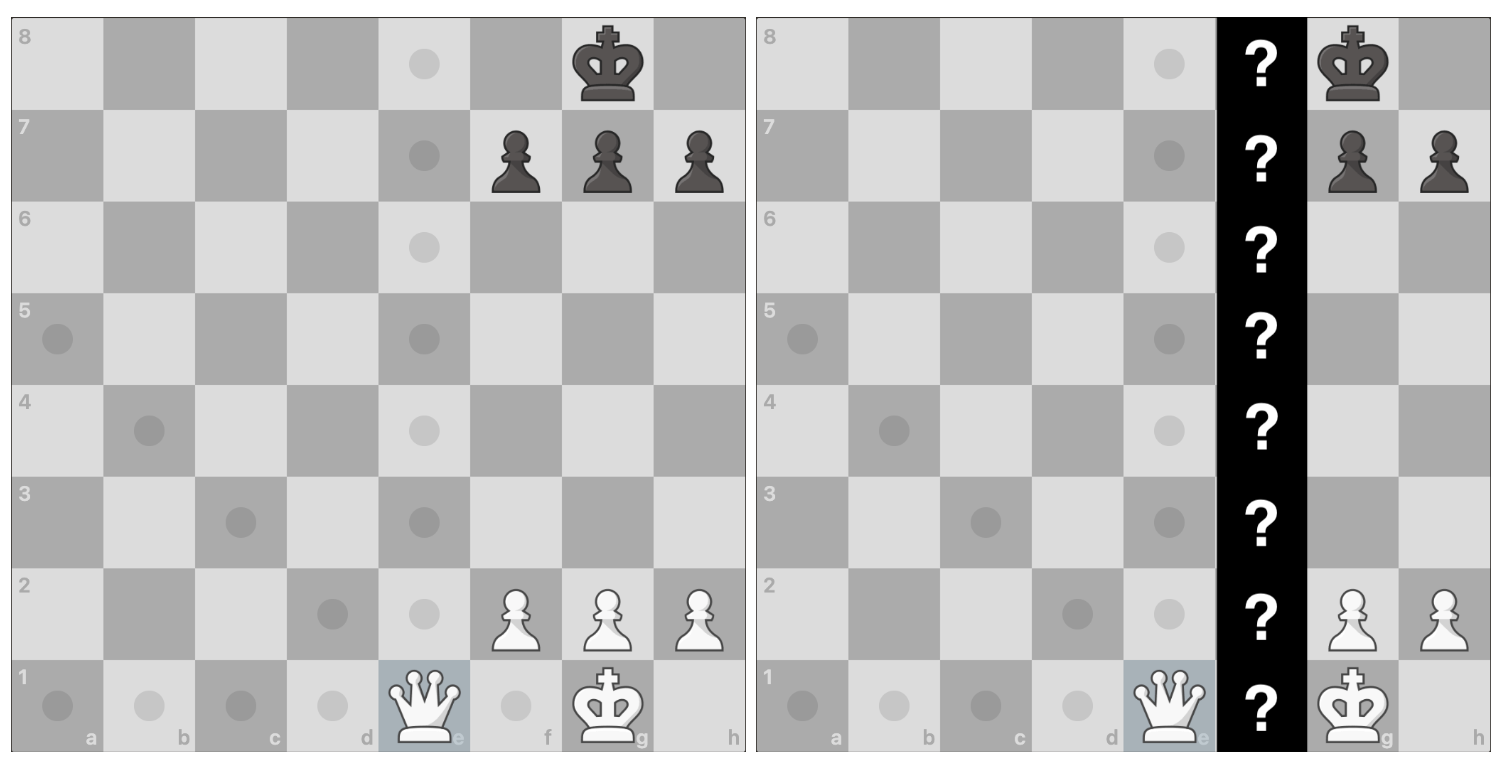

While we are very excited about these promising results, our job is not done yet. Controlling the motion of a quadcopter is very different from flying people around with an autonomous taxi. Obviously, quality, safety, and reliability are an absolute must; but is it possible to provide such guarantees when the environment is uncertain or even unknown? To see why this is hard, think of a game of chess again: selecting the best move may no longer be easy, or even possible, if part of the board is unknown.

Of course, we can never be 100% sure in our decision making when the information is uncertain. Yet, sometimes decisions need to be made even in adverse circumstances. For example, the cameras of an autonomous car may struggle to identify an object (or, worse, a person). However, our algorithms need to make safe and reliable decisions in real time, even in such situations. A pressing open problem is thus to find reasonable ways to quantify uncertainty, which can then be leveraged in smart decision making. Our latest paper takes a first step in this direction, building on the data-driven approach described above.

Do you want to find out more about this line of research? Check out our webpages (linked here, here and here) and stay tuned. ;)