Safety by design: What controllers do

In our last blog post, we dug into the different aspects of safety that control engineers must consider – from the psychological safety of knowing that a system will work as expected, to the physical safety of preventing harm. We saw that different specifications sometimes come into conflict, and need to be prioritised; and that it’s important to ensure the outcome isn’t just barely ok, but is safe enough. So how can we perform control to make sure these safety constraints are respected?

Of course, there is no one answer. Control researchers work with a host of possible tools that can be applied to different problems. When it comes to controlling real-world systems, two of the dominant approaches are Proportional-Integral-Derivative (PID) control and Model Predictive Control (MPC). Each of these has different strengths.

How controllers make decisions

PID control schemes for tracking a desired setpoint, such as temperature, combine three terms: the present error (proportional), the accumulated past error (integral), and the rate of change of the error (derivative). For example, SBB uses PID controllers to control the climate within trains by measuring the difference between actual and desired temperatures.

Tuning the proportional, integral, and derivative gains (the weights given to each term in the calculation) leads to different behaviours: the system may overshoot the setpoint, or take more or less time to reach it, and so on. In some cases, proper tuning can help to ensure that safety requirements are satisfied, but PID control schemes typically cannot guarantee either safety or performance.
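To make the weighted sum concrete, here is a minimal sketch of a discrete-time PID loop in Python. The cabin model, its coefficients, the heater limits, and the gain values are all hypothetical, chosen only for illustration; they are not taken from SBB's system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float  # weight on the present error (proportional)
    ki: float  # weight on the accumulated past error (integral)
    kd: float  # weight on the rate of change of the error (derivative)
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, setpoint: float, measurement: float, dt: float) -> float:
        """Return the control input for one sampling period of length dt."""
        error = setpoint - measurement
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample yet
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_cabin(pid, setpoint=21.0, outside=5.0, t0=15.0, dt=1.0, steps=600):
    """Hypothetical first-order cabin model: dT/dt = -a*(T - outside) + b*u."""
    a, b = 0.01, 0.05  # assumed heat-loss and heater coefficients
    temp, history = t0, []
    for _ in range(steps):
        u = pid.step(setpoint, temp, dt)
        u = max(0.0, min(10.0, u))  # assumed heater limits
        temp += dt * (-a * (temp - outside) + b * u)
        history.append(temp)
    return history


if __name__ == "__main__":
    history = simulate_cabin(PID(kp=2.0, ki=0.1, kd=0.5))
    print(f"peak {max(history):.2f} C, final {history[-1]:.2f} C")
```

Changing `kp`, `ki`, or `kd` changes the overshoot and settling time, but nothing in this loop enforces a limit on the temperature itself, which is why tuning alone gives no safety guarantee.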

In contrast to this weighted sum approach, MPC solves a sequence of optimisation problems in order to find a control policy. At each time step, the controller finds a planned sequence of inputs that minimises a projected control cost over a finite horizon, applies the first of those inputs, and then solves the next problem from the newly measured state; with each step you know a bit better what your inputs should be. This process is repeated continuously, constantly adapting to new information.

MPC excels at optimising within set constraints, which is why NCCR Automation researcher Ahmed Aboudonia is working on adding an MPC controller to SBB’s PID control system to improve efficiency and save energy while ensuring passenger comfort on Swiss trains. It’s also why MPC is useful for safety problems. Fatal and non-fatal safety specifications can be added directly to the MPC optimisation problems, either as a hard constraint (that is, the projected trajectory in the future must satisfy the desired specifications), or as a cost (it would be best if these constraints were satisfied, but it is acceptable to occasionally violate them).
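The difference between a hard constraint and a cost can be sketched with a toy receding-horizon controller. Everything here is an assumption made for illustration: the scalar model, the finite input set (which lets us enumerate every plan instead of calling a numerical solver), the horizon, and the weights. Real MPC implementations would normally use a proper optimisation solver.

```python
from itertools import product

A, B = 1.0, 1.0                        # hypothetical model: x_next = A*x + B*u
INPUTS = (-1.0, -0.5, 0.0, 0.5, 1.0)   # finite input set, so plans can be enumerated
X_MAX = 5.0                            # safety specification: keep x <= X_MAX
HORIZON = 4                            # number of steps planned ahead


def plan(x0, target, soft_weight=None):
    """Return the cheapest input sequence over the horizon, or None if infeasible.

    soft_weight=None  -> X_MAX is a hard constraint (violating plans are discarded)
    soft_weight=w     -> violations are allowed but penalised by w * violation
    """
    best_cost, best_seq = float("inf"), None
    for seq in product(INPUTS, repeat=HORIZON):
        x, cost, feasible = x0, 0.0, True
        for u in seq:
            x = A * x + B * u
            violation = max(0.0, x - X_MAX)
            if violation > 0.0:
                if soft_weight is None:
                    feasible = False
                    break
                cost += soft_weight * violation
            cost += (x - target) ** 2 + 0.1 * u ** 2  # tracking cost + input effort
        if feasible and cost < best_cost:
            best_cost, best_seq = cost, seq
    return best_seq


def run_mpc(x0, target, steps=20, soft_weight=None):
    """Receding horizon: plan, apply only the first input, then re-plan."""
    x, trajectory = x0, [x0]
    for _ in range(steps):
        seq = plan(x, target, soft_weight)
        if seq is None:
            raise RuntimeError("optimisation problem is infeasible")
        x = A * x + B * seq[0]
        trajectory.append(x)
    return trajectory


if __name__ == "__main__":
    print("hard:", run_mpc(0.0, target=6.0))
    print("soft:", run_mpc(0.0, target=6.0, soft_weight=1.0))
```

With the target placed beyond `X_MAX`, the hard-constrained run stops at the limit, while the soft-constrained run trades a small violation for a lower tracking cost.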

A core property sought in MPC design is “recursive feasibility”. This means that if a system is predicted to be safe up to the given time horizon (say, 30 seconds into the future), then it will continue to be safe over the course of the entire execution (maybe 4,000 seconds). More specifically, applying the control input generated by MPC at the current time ensures that it will be possible to solve the optimisation problem of finding a control input at the next time. This property is crucial when designing MPC controllers.
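One common way to establish this property is the "shifted sequence" argument, sketched below in LaTeX. The notation is our own assumption rather than anything defined in this post: dynamics $x_{k+1} = f(x_k, u_k)$, state and input constraint sets $\mathcal{X}$ and $\mathcal{U}$, horizon $N$, a terminal set $\mathcal{X}_f$, and a terminal controller $\kappa_f$. The argument also assumes the model matches the real system exactly.

```latex
% Assumption: the terminal set is safe and can be held forever by \kappa_f.
\mathcal{X}_f \subseteq \mathcal{X}, \qquad
\forall x \in \mathcal{X}_f:\;
\kappa_f(x) \in \mathcal{U}
\ \text{and}\
f\bigl(x, \kappa_f(x)\bigr) \in \mathcal{X}_f .

% Feasible plan at time k, with predicted states x_1^*, \dots, x_N^*:
U_k^* = \bigl(u_0^*, u_1^*, \dots, u_{N-1}^*\bigr), \qquad
x_i^* \in \mathcal{X}, \quad u_i^* \in \mathcal{U}, \quad x_N^* \in \mathcal{X}_f .

% After applying u_0^*, the state is x_{k+1} = x_1^*, and the shifted plan
\tilde{U}_{k+1} = \bigl(u_1^*, \dots, u_{N-1}^*, \kappa_f(x_N^*)\bigr)

% satisfies every constraint, so the problem at time k+1 has a solution.
```

The candidate plan need not be optimal; it only has to exist, so that the optimiser at the next step always has at least one safe option to improve on.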

What about uncertainty?

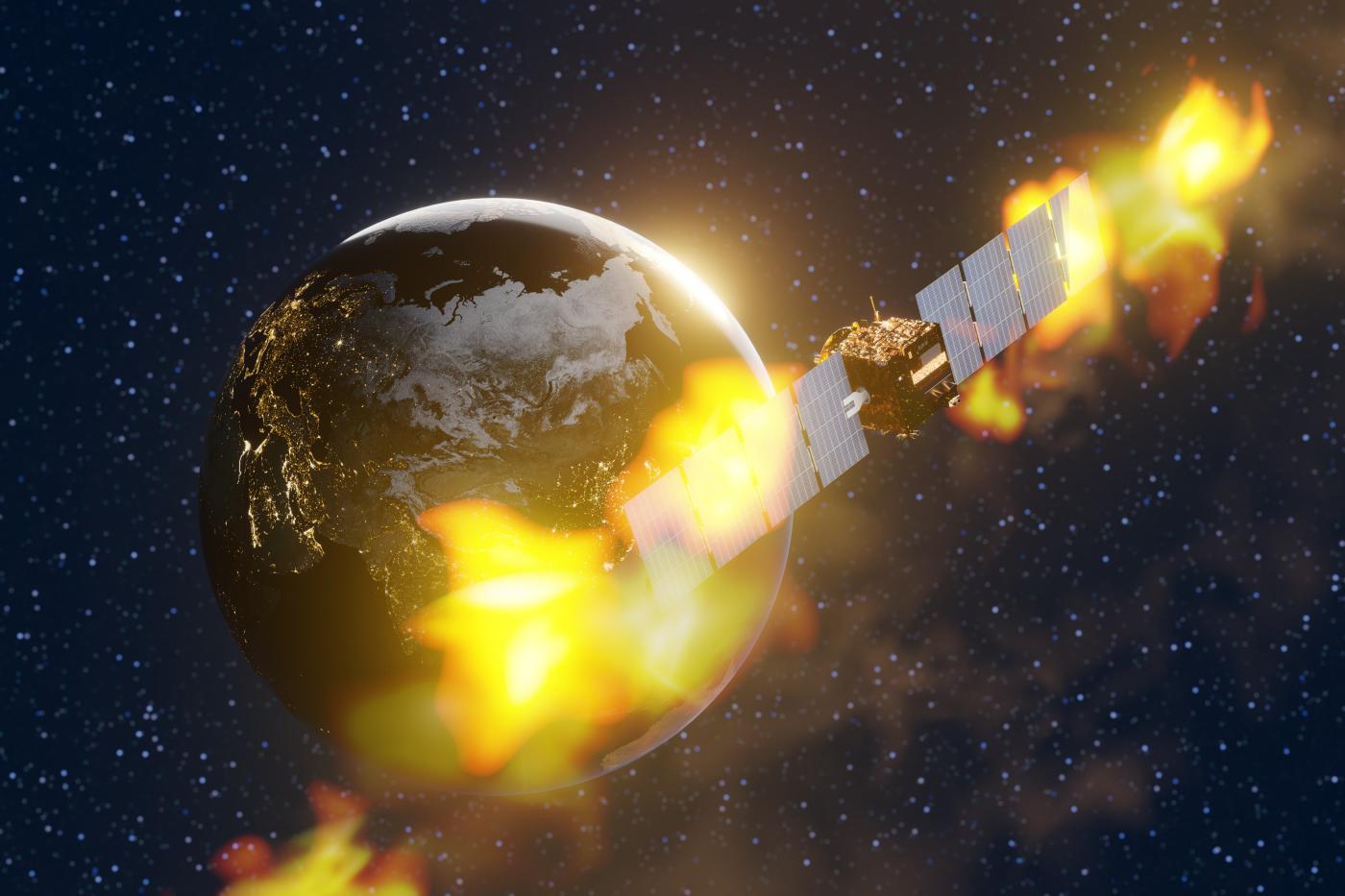

Once the controller is designed, with safety specifications factored in, it will usually be tested in a simulation before being deployed on the real system. However, a controller that performs well within a simulation may not guarantee safety when applied in the real world. This “Sim2Real” gap could have any number of causes: sensor noise, uncalibrated cameras, gusts of wind, unexpected user behaviour, faulty components, or even cosmic rays flipping bits in a spacecraft’s computer.

So control systems need control laws that remain safe despite such uncertainties. Engineers can draw on two main methodologies to deal with uncertainty: robust control and stochastic control. A system is robustly safe if it remains safe for every possible value of the uncertain quantity: for instance, if the noise on a wire will always lie within ±0.1 volts, or the wind speed hitting a drone is always within 10 m/s. A system is stochastically safe if it obeys the safety constraint with high probability. Controllers that obey stochastic safety constraints are generally less conservative than controllers obeying robust safety constraints (that is, they may have a higher tolerance for cost, risk, etc. in pursuit of better performance), because a robust controller always needs to respond to the worst-case disturbance.
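The gap in conservatism can be illustrated with the ±0.1 volt example. The sketch below assumes, purely for illustration, that the noise is uniformly distributed on that interval and that the constraint is one-sided (signal plus noise must stay below a limit). It computes the safety margin each approach must reserve and checks the resulting violation rates by simulation.

```python
import random


def robust_margin(w_max):
    """Margin that covers every possible noise value in [-w_max, w_max]."""
    return w_max


def chance_margin_uniform(w_max, eps):
    """Margin covering the noise with probability 1 - eps.

    Assumes noise ~ Uniform(-w_max, w_max); the margin is its (1 - eps) quantile.
    """
    return -w_max + (1.0 - eps) * 2.0 * w_max


def violation_rate(margin, w_max, n=20000, seed=0):
    """Fraction of simulated noise samples that exceed the reserved margin."""
    rng = random.Random(seed)
    violations = sum(rng.uniform(-w_max, w_max) > margin for _ in range(n))
    return violations / n


if __name__ == "__main__":
    w_max, eps = 0.1, 0.05
    for name, m in [("robust", robust_margin(w_max)),
                    ("stochastic", chance_margin_uniform(w_max, eps))]:
        print(f"{name}: margin {m:.3f} V, violation rate {violation_rate(m, w_max):.4f}")
```

The stochastic margin is smaller, so the controller can operate closer to the limit, at the price of occasionally crossing it. For noise distributions with long tails the difference between the two margins is typically much larger than in this uniform example.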

Stochastic constraints can be further split into individual chance constraints or joint chance constraints. Individual chance constraints ensure safety (up to the defined probability) separately at each point in time. In contrast, a joint chance constraint bounds the probability of staying safe over the course of the entire trajectory. For example, a control policy for an aeroplane could be chosen such that the probability of the plane landing safely at its destination (and respecting all constraints over the course of its flight) exceeds 99.9999%. Joint chance constraints are more appropriate safety specifications in practice than individual chance constraints, but require more computational effort and technical care to solve.
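A short calculation shows why per-step guarantees do not automatically add up to a trajectory-wide guarantee. The sketch below uses Boole's inequality (the union bound), which holds without any independence assumption, and, for comparison, the exact value under the additional assumption that violations at different steps are independent. The function names are our own.

```python
def joint_safety_lower_bound(eps, steps):
    """Guaranteed P(safe at every step) when each step violates with prob. <= eps.

    Boole's inequality: P(any violation) <= steps * eps.
    """
    return max(0.0, 1.0 - steps * eps)


def joint_safety_if_independent(eps, steps):
    """P(safe at every step) if each step violates with prob. exactly eps
    and the steps are independent (an assumption)."""
    return (1.0 - eps) ** steps


def per_step_budget(delta, steps):
    """Per-step violation budget that guarantees joint safety >= 1 - delta."""
    return delta / steps


if __name__ == "__main__":
    # 99% safety at each of 100 steps says little about the whole trajectory.
    print(joint_safety_lower_bound(0.01, 100))
    print(joint_safety_if_independent(0.01, 100))
    # Per-step budget needed for a joint target of 99.9999% over 1,000 steps.
    print(per_step_budget(1e-6, 1000))
```

Splitting the budget evenly across steps is simple but conservative, which is one reason joint chance constraints call for more careful treatment.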

| Constraint type | Guarantee |
| --- | --- |
| Robust (hard) constraints | Stay safe for any possible value of the uncertain quantity |
| Individual chance constraints | Stay safe at every separate point in time with some probability |
| Joint chance constraints | Stay safe across the entire trajectory with some probability |
| Distributional robustness | Stay safe for all consistent noise processes with some probability |

A bridge between robust and stochastic safety is distributionally robust safety. Distributionally robust methods provide stochastic safety against all noise processes sufficiently close to a reference distribution (which is typically based on observed data) – that is, actual events are used to derive a range of probable noise that defines the “robust” safety constraints. Since it is theoretically possible that this range could be exceeded, all in all the safety constraints must be called stochastic, but within the probable range, robust constraints are obeyed with high probability. Distributionally robust safety has applications in the operation of wind turbines and solar farms, in which historical data for wind and cloud-cover trends can be used to define likely noise sources in the future.
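As a rough illustration, the sketch below compares a margin that trusts a single assumed distribution with one that holds for a whole family of distributions. Note that it swaps in a simpler notion of "family" than the one described above: instead of all distributions close to a reference distribution, it takes all distributions sharing a given mean and variance, for which Cantelli's inequality gives a closed-form margin. The gust data are made up, and plugging in the sample mean and standard deviation is itself an approximation.

```python
from statistics import NormalDist, mean, stdev


def gaussian_margin(samples, eps):
    """(1 - eps) quantile if the disturbance is assumed to be exactly Gaussian."""
    z = NormalDist().inv_cdf(1.0 - eps)
    return mean(samples) + z * stdev(samples)


def moment_robust_margin(samples, eps):
    """Margin valid for every distribution with this mean and variance.

    Cantelli's inequality: P(w - mu >= k*sigma) <= 1 / (1 + k**2),
    so k = sqrt((1 - eps) / eps) keeps the violation probability <= eps.
    """
    k = ((1.0 - eps) / eps) ** 0.5
    return mean(samples) + k * stdev(samples)


if __name__ == "__main__":
    # Hypothetical historical wind gust measurements in m/s.
    gusts = [4.1, 5.3, 3.8, 6.0, 4.7, 5.1, 4.4, 5.8, 3.9, 4.9]
    eps = 0.05
    print(f"Gaussian margin:      {gaussian_margin(gusts, eps):.2f} m/s")
    print(f"moment-robust margin: {moment_robust_margin(gusts, eps):.2f} m/s")
```

The distributionally robust margin is larger than the single-distribution one, but it does not require the disturbance to be bounded, which places it between the purely stochastic and the purely robust approaches.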

MPC schemes can find controllers that ensure safety under all these uncertainty conditions – robust, stochastic, and distributionally robust uncertainty (so long as recursive feasibility holds).

Safety you can rely on

As we have discussed, what “safety” means depends highly on the specific application. We have also explored methods to define, quantify, and control for safety in real-world systems. And we have considered the fact that safety must be prioritised at every level, with management and policy decisions shaping the process from the very first steps (defining norms, assessing risks and so on) to the final, ongoing safety audits.

Control can contribute to ensuring safety throughout this entire lifecycle. Ideally, the goal of control design should be verified safety that users can rely on without thinking… as unquestioned as flipping a light switch.