Going back to what we know: Injecting physical insights into neural networks

Neural networks (NNs), a kind of artificial intelligence, can beat humans at Go, predict the 3D structure of proteins, or convincingly answer complex questions. And yet, despite these great successes, NNs can also fail quite spectacularly because of a phenomenon called shortcut learning: they sometimes solve a task in an unexpected way, for example by differentiating between dogs and cats based on the presence or absence of a leash. While this does work to some extent, it doesn’t match our human intuition. We know that’s not the real difference between cats and dogs, and it won’t always work.

Now, the issue is that NNs won’t give you any feedback on what they’re doing: there is no general way for humans to detect their failures, which makes them uninterpretable in practice. Letting such a shortcut slip through could mean that bigger errors turn up later on. If, say, the NN were tasked with advising on medical care for the identified animal, it would be quite important to know reliably which animal was which. The same kind of weakness puts humans at risk when NNs are deployed to model or control (potentially safety-critical) physical systems such as building heating systems, making it an urgent problem to fix.

So why does this happen – and how do we prevent such failures? Let’s start at the beginning.

A neural network, what’s that?

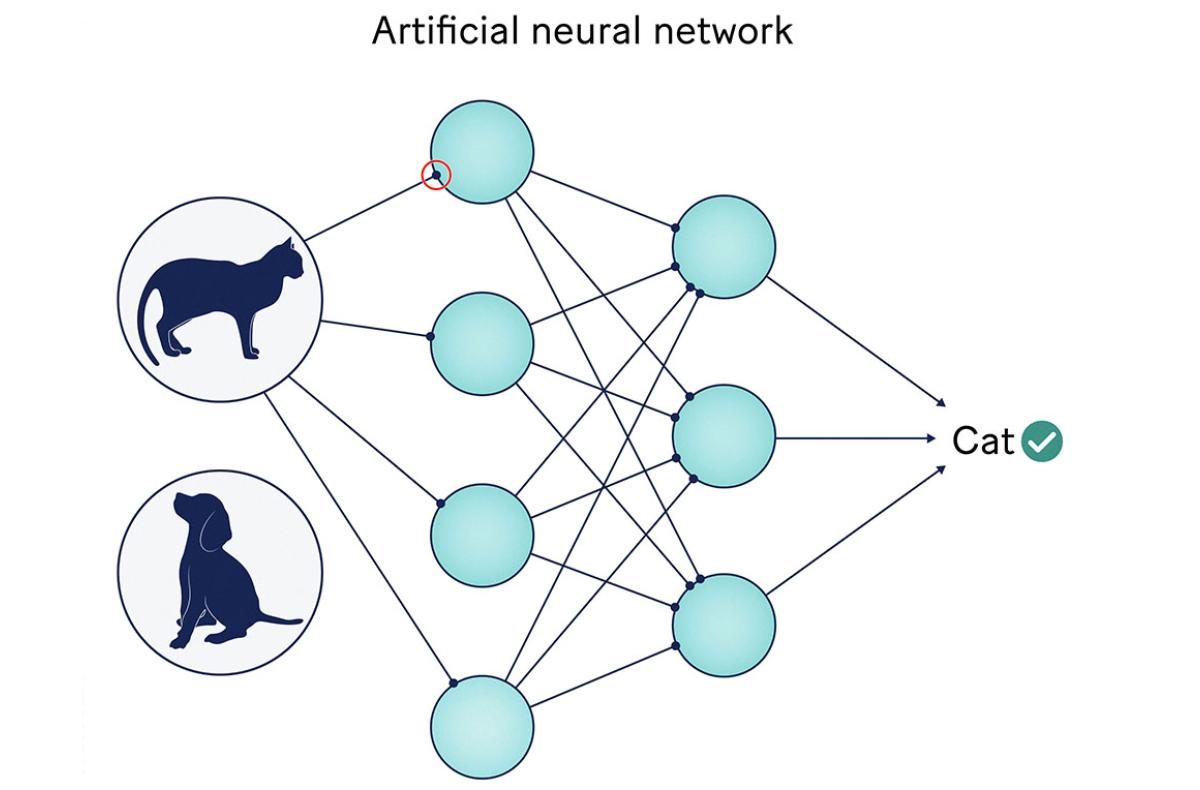

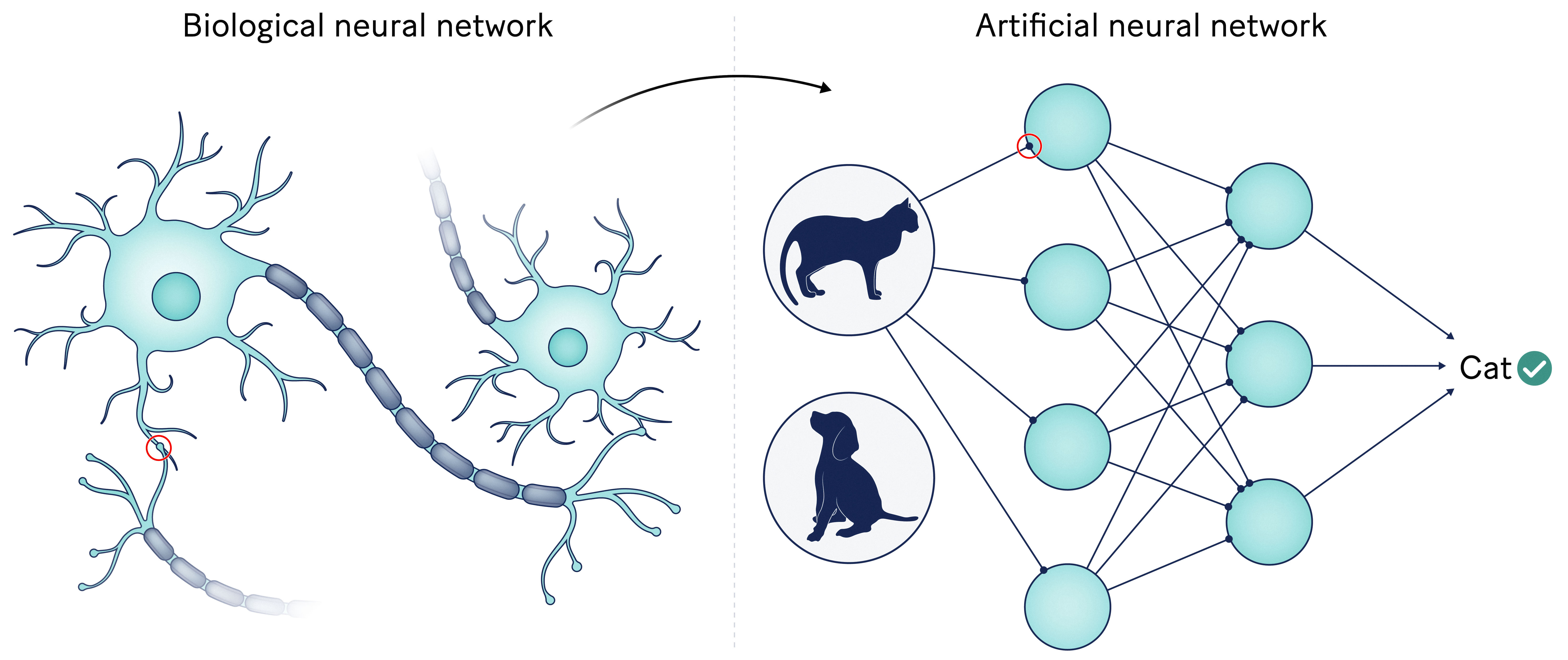

NN design is inspired by the human brain. As such, NNs are composed of a bunch of neurons (typically organized in layers) and connections between them (analogous to synapses in the human brain). Of course, the neurons here are mathematical functions rather than nerve cells… but that’s the idea. An NN takes some data (such as an image) as input and feeds it through the network. Each neuron aggregates and transforms the data it receives from other neurons before passing the result on to the next ones. At the end of the process, the NN outputs a result (such as “cat” or “dog”).
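To make this description concrete, here is a minimal Python sketch of a forward pass through a tiny network. The weights are hand-picked placeholders rather than trained values, the three input numbers stand in for features extracted from an image, and the convention that a positive score means “cat” is an assumption for this toy example; real networks have far more neurons and learned weights.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs, passed through a nonlinearity."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return math.tanh(total)

def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all receive the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(x, params):
    """Feed the input through each layer in turn; the last layer's output is the result."""
    for weight_rows, biases in params:
        x = layer(x, weight_rows, biases)
    return x

# Hypothetical hand-picked weights: 3 inputs -> 2 hidden neurons -> 1 output neuron.
params = [
    ([[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
features = [0.9, 0.1, 0.4]  # stand-in for features extracted from an image
score = forward(features, params)[0]
label = "cat" if score > 0 else "dog"
print(label, round(score, 3))
```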

Here comes the magic of NNs, and the reason they are used everywhere: we can train them to achieve a given objective (here, differentiating between cats and dogs)! In other words, we know how to tweak the neurons and connections of NNs until they perform the desired task. Quite neat, right?
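Training means adjusting the weights to reduce the error on labelled examples. A common way to do this is gradient descent; the sketch below trains a single sigmoid neuron on a tiny, made-up dataset (the two features and their values are hypothetical). Real NNs apply the same idea to millions of weights, typically using backpropagation to compute the gradients.

```python
import math

def predict(x, w, b):
    """Probability that example x is a cat, according to a single sigmoid neuron."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train(data, lr=0.5, epochs=200):
    """Batch gradient descent on the average binary cross-entropy loss."""
    w, b = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            # Derivative of the cross-entropy loss w.r.t. the neuron's pre-activation z.
            err = predict(x, w, b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        # Nudge every parameter a small step against its average gradient.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Made-up features: [ear pointiness, snout length]; label 1 = cat, 0 = dog.
data = [([0.9, 0.2], 1), ([0.8, 0.3], 1), ([0.7, 0.1], 1),
        ([0.2, 0.9], 0), ([0.3, 0.8], 0), ([0.1, 0.7], 0)]
w, b = train(data)
for x, y in data:
    print(x, "label:", y, "predicted P(cat):", round(predict(x, w, b), 2))
```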

This complex, brain-inspired network structure is both the main strength and the main pitfall of NNs: it allows them to achieve superhuman performance on many tasks, but makes them essentially impossible to understand or even interpret. After all, we don’t really understand how our own brains work either. That’s why NNs are often referred to as black-box models: when you work with NNs, you feed them some input and observe the output, without really knowing what happens in between!

We don’t understand why NNs work, but why care?

This is indeed a good question: if NNs do work, why would we care about why or how?

Well, since we don’t know why they work, this also means we have no idea why or – maybe more critically – when they will fail. And they most likely will.

While wrongly classifying a cat as a dog might not matter much, such errors can quickly become unethical or dangerous when humans are affected. You wouldn’t want to miss a job opportunity simply because some NN mistakenly decided your resume wasn’t good enough. And that’s before you even consider problems like autonomous car crashes…

The thing is, interactions with humans are unavoidable in a lot of applications – especially when working with physical systems. We need solutions to control what neural networks do in practice.

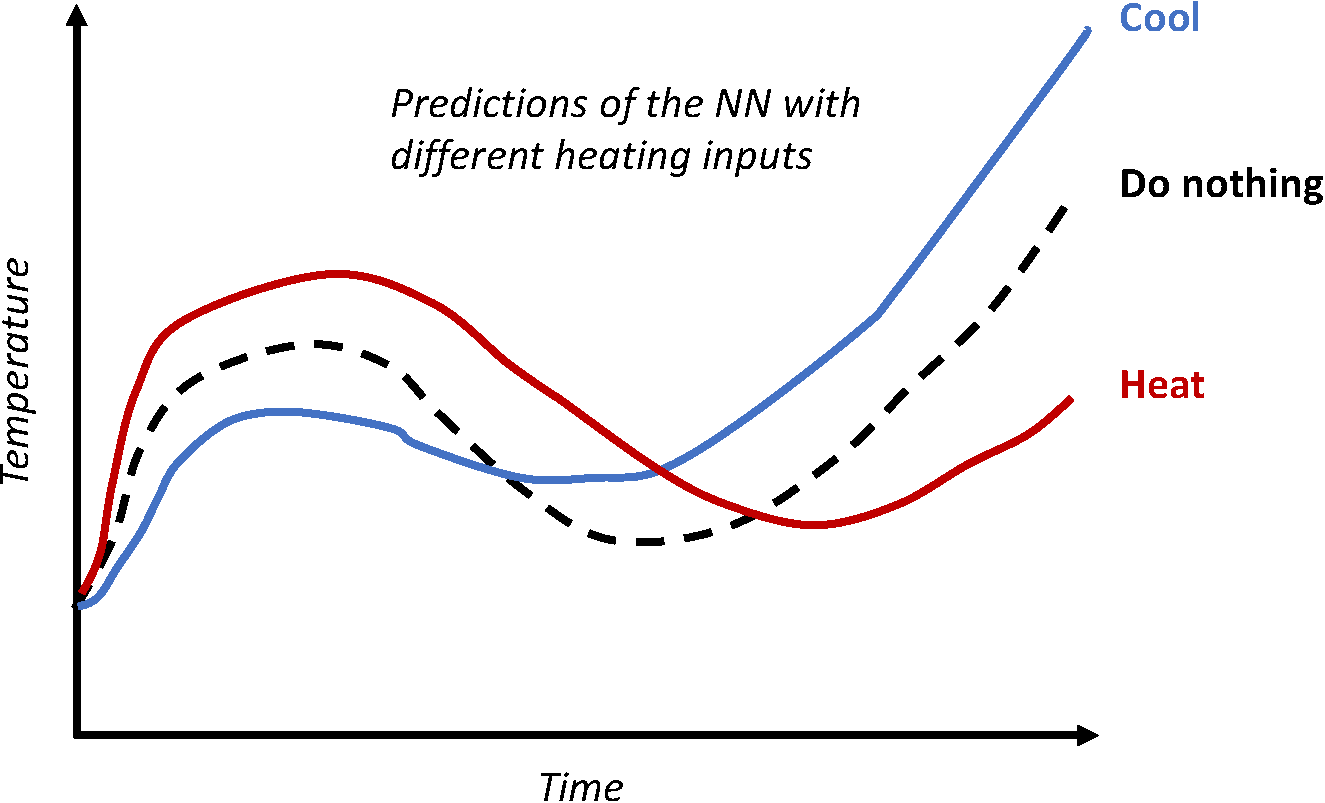

Our recent analyses, for example, revealed shortcut learning when modeling the temperature inside a building. Some classical black-box NNs were indeed capable of predicting the temperature very well, but without taking the heating and cooling power input into account! In other words, for these NNs, heating or cooling a room has absolutely no impact on its temperature. They picked up correlations in the data but did not understand causality. For instance, they missed the causal chain in which cold weather outside leads to the heating system being switched on, which in turn drives the temperature changes inside the building.

We even found that some of the trained NNs at times completely reversed the laws of thermodynamics, predicting that heating a building could ultimately lead to lower temperatures than cooling it (Figure 2)! That’s quite funny, but not very practical, unless we have just created an artificial intelligence capable of rewriting the physical laws of our world.
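One practical way to catch such a failure is to probe the trained model directly: keep all other inputs fixed, sweep the heating/cooling power, and check that the predicted temperature rises with it. The sketch below assumes the model is exposed as a hypothetical Python function `model(t_in, t_out, power)` returning the next indoor temperature; the three toy models are hand-written stand-ins, not trained networks. Passing this test at a few points does not prove a model is consistent everywhere, but failing it is a clear red flag.

```python
def heating_is_consistent(model, t_in, t_out, powers):
    """True if the predicted temperature strictly increases with the power input.

    `powers` must be sorted from strongest cooling (negative) to strongest heating (positive).
    """
    preds = [model(t_in, t_out, p) for p in powers]
    return all(lo < hi for lo, hi in zip(preds, preds[1:]))

# Hypothetical toy models, written by hand for illustration (units: °C and kW).
def shortcut_model(t_in, t_out, power):
    # Relies only on the outside temperature and ignores the power input entirely.
    return t_in + 0.1 * (t_out - t_in)

def reversed_model(t_in, t_out, power):
    # Heating *lowers* the temperature: the thermodynamics-defying behaviour.
    return t_in + 0.1 * (t_out - t_in) - 0.2 * power

def consistent_model(t_in, t_out, power):
    # Heating raises and cooling lowers the predicted temperature.
    return t_in + 0.1 * (t_out - t_in) + 0.2 * power

powers = [-5.0, 0.0, 5.0]  # cooling, off, heating
for m in (shortcut_model, reversed_model, consistent_model):
    print(m.__name__, heating_is_consistent(m, t_in=21.0, t_out=-2.0, powers=powers))
```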

Of course, this means that such a flawed NN is useless for, say, controlling building temperatures. Since it doesn’t understand what heating achieves, it might never instruct the building to run its heating during the winter, no matter how deep the snow outside.

And unless you retrain it to teach it the right behaviours, the NN has no way of knowing that it did anything wrong! Worse, it won’t give any feedback on what it’s doing, so its flaws stay hidden. And if an engineer never realises that something is wrong with their model, this could lead to the ethical or physical dangers we considered above.

Physically consistent neural networks

On the other hand, the laws of thermodynamics have been known for well over a century, which allows us to design models grounded in the underlying physics, and which are therefore physically consistent by design. The downside, of course, is that such models are often much less accurate than NNs, which can capture highly complex phenomena through their many connections. Wouldn’t it be great if we could get the best of both worlds?

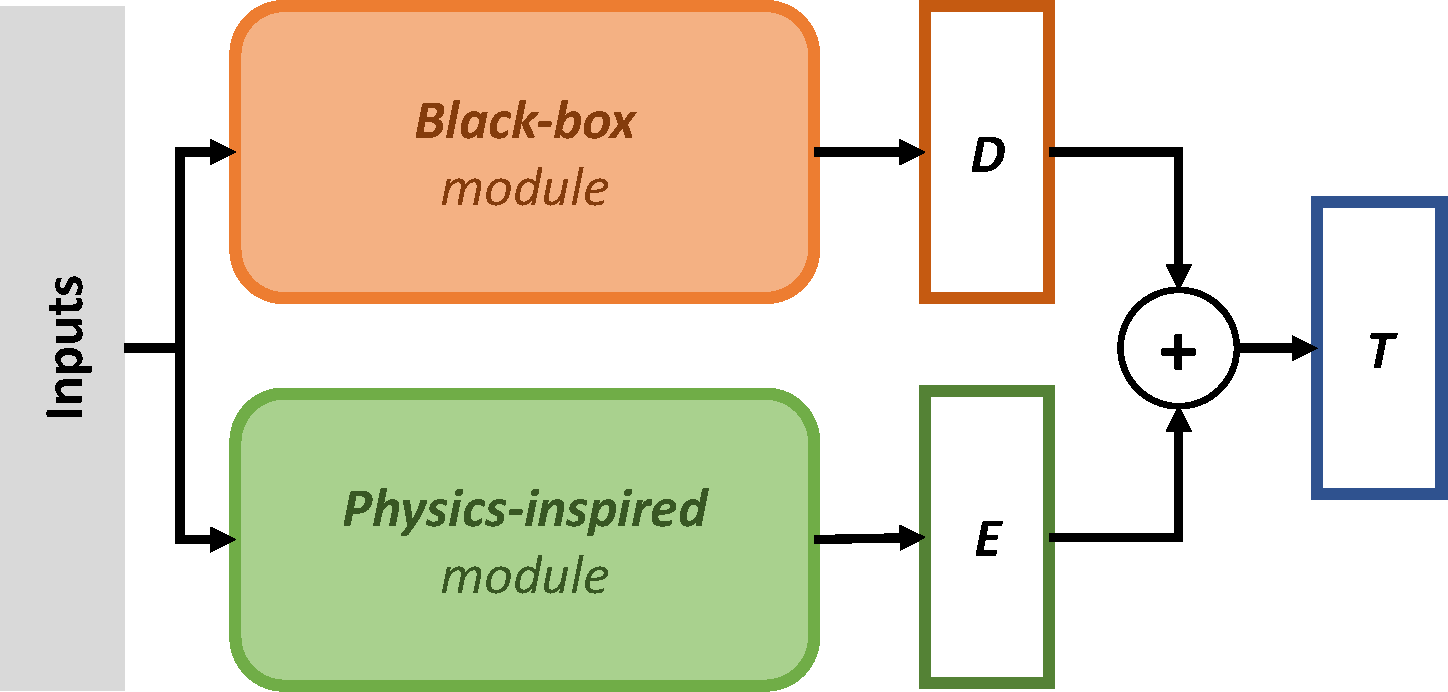

This is exactly what physically consistent neural networks (PCNNs) do, as you can check in our latest papers. The key idea is to capture the known physical phenomena in a so-called physics-inspired module, which runs in parallel to a pure black-box NN architecture. This ensures by design that what we know about the underlying physics is respected, while still leveraging the flexible structure of NNs to capture complex phenomena that the physics-inspired module cannot grasp.
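The sketch below illustrates this parallel structure for a single time step, in the spirit of the building case. It is a simplified, hypothetical formulation, not the exact equations from our papers: an energy-like state `e` is updated by a physics-inspired rule whose parameters are forced to be positive (via softplus), while a black-box state `d` is updated by an arbitrary function that never sees the power input. The predicted temperature is their sum, so the effect of heating on the next temperature is governed entirely by the physics-inspired module. The `black_box` lambda is a placeholder for a trained NN.

```python
import math

def softplus(x):
    """Smoothly maps any real number to a strictly positive one."""
    return math.log1p(math.exp(x))

class ToyPCNN:
    """Hypothetical one-zone model: temperature = black-box part + physics-inspired part."""

    def __init__(self, raw_a, raw_b, black_box):
        self.raw_a = raw_a          # unconstrained heating/cooling gain
        self.raw_b = raw_b          # unconstrained heat-loss coefficient
        self.black_box = black_box  # stand-in for a neural network

    def step(self, d, e, t_out, power, features):
        a = softplus(self.raw_a)    # a > 0: heating adds energy, cooling removes it
        b = softplus(self.raw_b)    # b > 0: heat flows from warm to cold
        t = d + e                   # current temperature estimate
        # Physics-inspired module: energy from the HVAC system plus exchange with the outside.
        e_next = e + a * power + b * (t_out - t)
        # Black-box module: may use other features (e.g. solar gains) but never `power`.
        d_next = self.black_box(d, features)
        return d_next, e_next, d_next + e_next

model = ToyPCNN(raw_a=-1.0, raw_b=-2.0, black_box=lambda d, f: 0.9 * d + 0.1 * f[0])
state = dict(d=0.5, e=20.0, features=[1.0])
for power in (-2.0, 0.0, 2.0):  # cooling, off, heating
    _, _, t_next = model.step(t_out=5.0, power=power, **state)
    print(power, round(t_next, 2))
```

Whatever the black-box function learns, it cannot reverse the sign of the heating effect, because it never receives `power` as an input.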

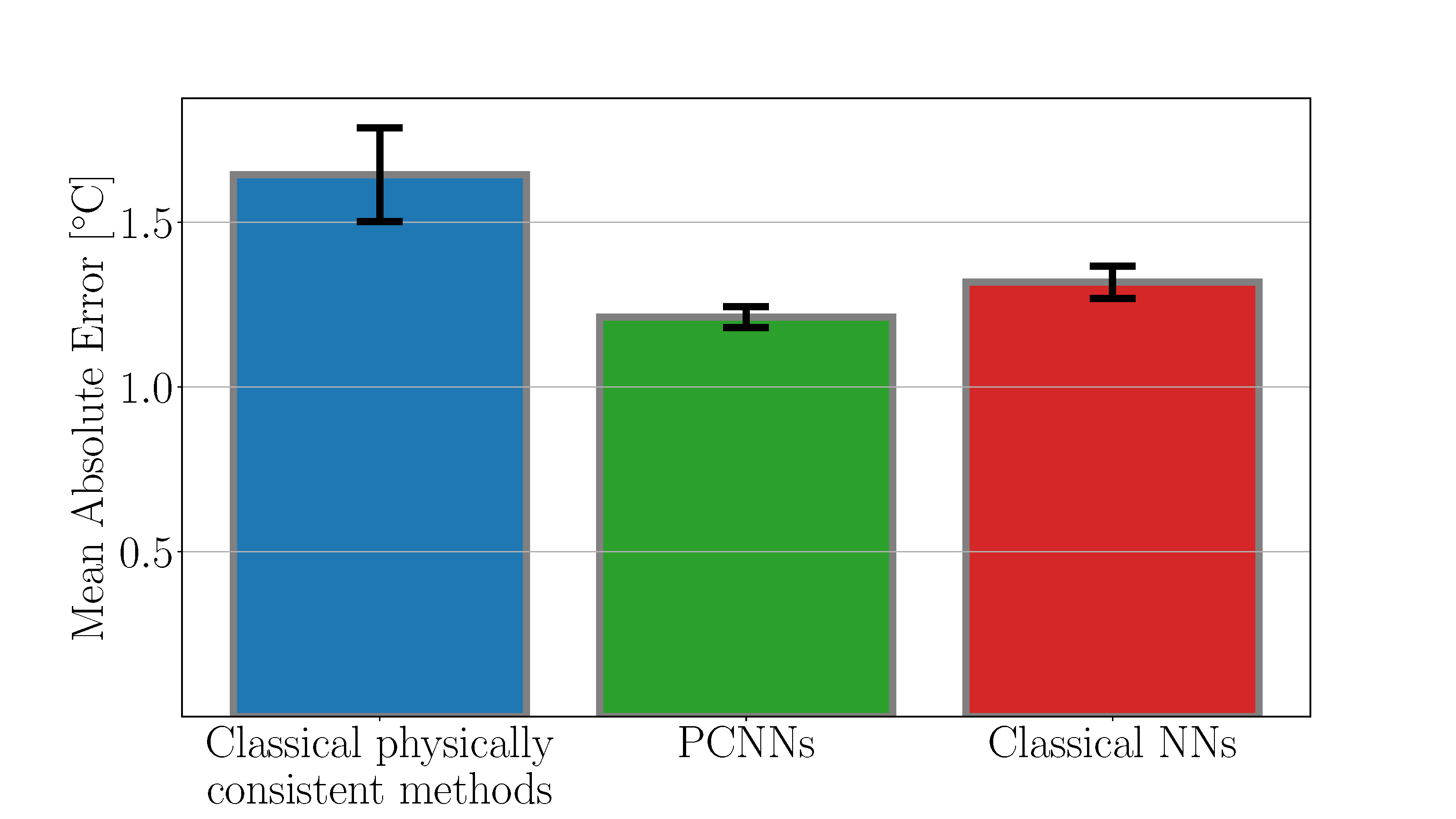

In the case of building temperature modeling, the physics-inspired module makes sure that heating leads to higher temperatures, cooling to lower ones, and that low outside temperatures cannot heat your building. And the accuracy is great: even better than that of pure NNs. We find that forcing the model to follow the underlying physics actually helps (Figure 5, adapted from here)! This PCNN is aware of the impact of heating and cooling and, if it is used to control your building, can be trusted not to let you freeze on your couch all winter.

Beyond buildings

We used building models as a practical case study to investigate the performance of PCNNs, but they can of course be applied to any task where (parts of) the underlying physics are known. A little knowledge of the system is enough to design the physics-inspired module; you then let the black-box module, running in parallel, capture potentially very complex unknown or unmodelled phenomena.

And the best part: you don’t need to do anything else. Once the physics-inspired module has been designed, you can seamlessly learn the parameters of both the NN and the physics-inspired module together, in one go! If you are interested, don’t hesitate to check how PCNNs are implemented in practice on GitHub.
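To illustrate what learning both modules in one go can look like, here is a dependency-free sketch in which a physics-inspired part (positive heating gain `a` and heat-loss coefficient `b`) and a tiny black-box part (a single tanh unit driven by a hypothetical solar-gain feature) are summed and trained jointly by gradient descent on the one-step prediction error. The data come from a made-up simulator, and gradients are computed by finite differences only to keep the example short; a real implementation would rely on automatic differentiation. This is a simplified stand-in, not the PCNN code from the repository.

```python
import math

def softplus(x):
    """Smoothly maps any real number to a strictly positive one."""
    return math.log1p(math.exp(x))

def predict(params, t, t_out, power, sun):
    """Next temperature = current one + physics-inspired term + black-box term."""
    raw_a, raw_b, w1, w2 = params
    physics = softplus(raw_a) * power + softplus(raw_b) * (t_out - t)
    black_box = w1 * math.tanh(w2 * sun)  # hypothetical tiny "network"
    return t + physics + black_box

def make_data(n=50):
    """Made-up building simulator; solar gains are unknown to the physics module."""
    data, t = [], 20.0
    for k in range(n):
        t_out = 5.0 + 3.0 * math.sin(0.3 * k)
        power = 2.0 * math.sin(0.7 * k)
        sun = max(0.0, math.sin(0.2 * k))
        t_next = t + 0.5 * power + 0.1 * (t_out - t) + 0.3 * sun
        data.append((t, t_out, power, sun, t_next))
        t = t_next
    return data

def loss(params, data):
    """Mean squared one-step prediction error."""
    return sum((predict(params, t, to, p, s) - tn) ** 2
               for t, to, p, s, tn in data) / len(data)

def train(data, params, lr=0.01, steps=500, eps=1e-6):
    """Update physics and black-box parameters together with finite-difference gradients."""
    params = list(params)
    for _ in range(steps):
        base = loss(params, data)
        grads = []
        for i in range(len(params)):
            shifted = params.copy()
            shifted[i] += eps
            grads.append((loss(shifted, data) - base) / eps)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params

data = make_data()
start = [0.0, 0.0, 0.1, 0.1]  # raw_a, raw_b, w1, w2
learned = train(data, start)
print("loss:", round(loss(start, data), 3), "->", round(loss(learned, data), 3))
print("heating gain a =", round(softplus(learned[0]), 3))
```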

Do you want to find out more about this line of research? Check out the other techniques we used to ensure neural networks aren’t doing stupid things here and here, and on my website.