Safety first: It’s all under control

Every day, we rely on countless complex systems without thinking about how they work. From tiny actions like flipping a light switch, to bigger decisions like placing orders with online retailers or even taking a flight, we usually consider what we need, but not what might go wrong. We simply expect that planes we board will not crash, that the lights will work, and that items marked for next-day delivery will indeed arrive the next day. Yet if these events don’t happen, the results could span from a mild inconvenience (late package) to an outright disaster (plane crash).

These expectations can be described as forming our concept of safety. If the expectations are met, we are safe; if they are violated, then the system has become unsafe. Safety, in this sense, is a measure of comfort and confidence in the ability of a device or system to do what we want it to; it may cover psychological or environmental safety, not just physical. Let’s dig into how engineers define (and ensure) safety, and how we balance different kinds of constraints. In a second blog post we will go into more depth on the technicalities of safety control.

What is safety?

For systems designers and operators, safety needs to be considered at all levels from concept to implementation and beyond, including fault detection and remedy (and emergency responses). It’s a managerial problem, not just an engineering one, but control engineers are crucial to creating safety systems at every step.

There are many aspects to consider. In the context of flight travel, the plane should land at its destination, arrive on time, unload all passengers and baggage, stay an acceptable distance from other objects while in the air (the ground, towers and fellow planes), retain structural integrity, maintain an acceptable fuel level, keep passengers comfortable throughout (with respect to temperature, pressure, and oxygen levels), have its software execute in a reliable and predictable manner, and be in good working condition for its next flight.

Some of these requirements are clearly more important than others. If the temperature inside the plane falls a little too low, the passengers may be uncomfortable but will not be harmed; the same cannot be said for a full pressure breach or plane crash. Similarly, arriving at the destination is the goal, but in an emergency situation (such as a damaged engine), the plane should land safely as soon as possible, anywhere it can, to allow for repairs.

So there’s a hierarchy of safety specifications, especially in the case of unavoidable failures. If the plane is certain to crash, then it should do so in a way that minimises casualties and damages – such as by crashing over water rather than into a densely populated city. Depending on the application, priorities may be set by many different stakeholders: standards bodies, (inter-)governmental agencies, companies, researchers, engineers, and users (including you).

And on top of that, safety specifications need to be balanced with stability: the tendency of the system to return to a point, pattern, or region. Safety and stability are complementary principles, and will affect each other. For an aeroplane, for instance, stability could involve returning to a nominal flight path after a disturbance, while safety would include not crashing or stalling as it does so.

What’s a safe space?

In judging whether a system is safe, we start with defining safe regions – although, as we will see, that means more than just geography.

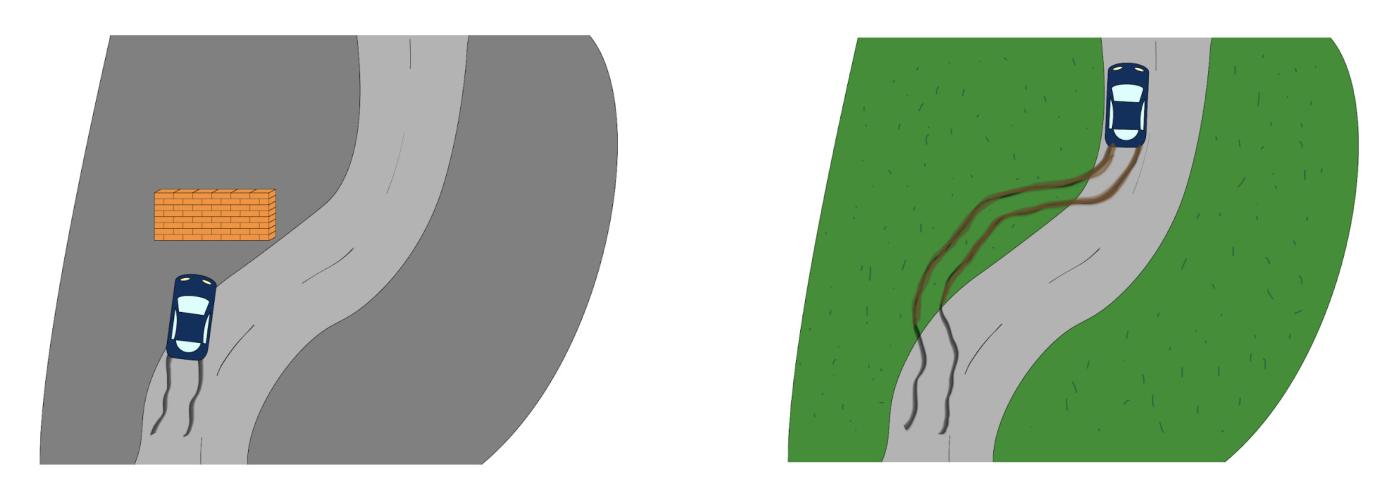

Let’s take a new example: consider road traffic. For a car driving along a road, the safe region (in the sense of position) is that part of the road that is not occupied by other road users (including pedestrians and cyclists, as well as vehicles) while the car observes the traffic laws (right-of-ways, traffic signals, shoulders and so on). Everything else is the unsafe region. That includes situations where the car would overlap position with other cars (whoops – that’s a collision), or perhaps with a building (crash!). But clearly, for safety, position alone does not tell the full story. We also need to consider velocities and other states.

Imagine a car on a collision course with a wall. When considering only the position, you could say the passengers in the car are in a safe region – after all, they have not yet crashed into the building. But if the car is moving fast enough that aggressive braking would not prevent a collision, then it is already unsafe. So in designing control systems for autonomous vehicles, the unsafe region must be defined to include the initial car position and velocity, as well as the effectiveness of its brakes. What’s more, safety specifications often depend on time and ordering of tasks. An electric car must be sufficiently charged, unplugged from the charging station, and then driven: keeping the car plugged in while starting to drive will generally cause the cable or charging apparatus to break.

In other words: it’s not enough to tell the car “do not crash”. Control engineering needs to commute this high-level specification (avoid the unsafe region!) into actionable tasks with properly ordered priorities. Human drivers operate under an unspoken but universally understood set of instructions: “Go to your destination at a safe speed, maintaining safe levels of fuel or charge, while obeying all laws of the road and avoiding other road users, even when they are not themselves obeying traffic laws.” They probably also follow a secondary, less frequently needed understanding: “If you can’t stay safe, choose to inflict the least damage on yourself, your passengers and other road users. Choose first to avoid the most vulnerable road users.” Engineers need to somehow translate all that into code… and defining the more fuzzier parts of that guidance, such as vulnerability, may be beyond the scope of engineering alone.

The core of the car’s programming can be expressed as a reach-avoid task: (ALWAYS NOT [in unsafe set]) and (EVENTUALLY [in destination set]). Reach-avoid tasks have applications in path planning: for instance, a warehouse robot should arrive and drop off its parcel into the loading zone while avoiding crashes with shelves and other agents. Vehicle navigation is just a subset of this kind of path planning.

Then, the time and task dependence involved can be expressed in the form of temporal logic, which is composed of the standard logical operations (NOT, AND, OR), and timed operations (ALWAYS, UNTIL, EVENTUALLY). Temporal logic can be used to build up specifications for systems, including those comprising multiple agents such as a swarm of drones in a light show. But, as you may imagine, it becomes increasingly difficult to verify and obey temporal logic specifications as the size and complexity of the systems grows. And the other factors involved in safety – from speed to emergency responses – demand increasingly complex logic.

There are formal methods that can be used to check whether the safety specifications are met. In reachability analysis, for example, all possible accessible states are explored (or approximated) to ensure that the unsafe set is never reached. Software controllers in cars and planes (among other applications) are rigorously checked to ensure that in every possible scenario, the program will be executed according to the logical specifications. The control laws also include instructions on how to balance safety specifications in unavoidable situations, since not all violations of safety specifications are equal. (It is worth noting that of course, a certificate of safety for the software alone does not guarantee safety for the entire system. A certifiably correct control algorithm for an aeroplane may still cause the plane to crash if some screws or fasteners are missing.)

It’s bad, but is it fatal?

Let’s go back to the aeroplane example and consider two possible events: crashing into a mountain, or arriving half an hour late to the destination. Both are violations of the safety specifications, but they are hardly comparable in severity.

Safety violations can be divided into two basic categories: fatal and non-fatal. “Fatality” here refers to termination of the system, not necessarily human life – it immediately stops the system from evolving. Fatal safety violations should be avoided at all costs, while non-fatal violations are acceptable but not preferred. For the plane, the crash is a fatal safety violation, while late arrival is non-fatal.

Other examples of fatal safety violations include a pedestrian being run over by a car, or a power grid blackout. They come with severe penalties (whether loss of human health, heavy financial damages, or other consequences) should be avoided at all costs.

Non-fatal safety violations could be costly, but do not on their own stop the system from continuing to operate. Control policy can allow for constraint violations, such as allowing air temperature to go beyond the defined comfortable bounds.

Complicating matters, current constraints in the power grid give rise to coupled fatal and non-fatal safety violations. A turbine motor (e.g. a gas generator) could output 5-10 times its safely rated current in short bursts while remaining safe; but sustaining this overcurrent for longer could cause the motor to break, or a power line to sag and possibly melt. These components have fatal safety specifications in terms of heat and physical stress. These constraints can be abstracted as non-fatal safety violations in terms of current, incentivising a controller to return to the safe region and discharge heat/reduce stress.

As the transition to renewable energy sources progresses, more fatal safety violations come into play. Solar power is typically generated in DC, and must be converted to AC by a power electronics device called an inverter. Inverters have a fatal safety constraint with respect to their current limit: overcurrent could melt the circuit components. Engineers working in power systems and power electronics are working on methods to keep the grid safe and stable under the rigid current limits required by inverters.

How safe are we?

A safe outcome may not in itself be enough. If two planes flying in opposite directions pass without crashing, does it matter whether they miss each other by 30km or 10cm? Passengers and staff on board would feel very differently about those two situations!

This is why we talk about safety quantification. Possible quantifiers could include the distance of closest approach, the minimum disturbance that could cause a crash, the worst-case probability of crashing, the minimum data corruption that could cause a data-consistent model of the system to crash, or the mean/quantile/risk of this distance (or other quantity) under uncertainty.

Quantifiers for specific applications could include the maximum speed of an aircraft, the height of a drone, the concentration of a toxic substance in the water, or the number of infected people during a pandemic. In any context, sometimes we may want to lower the maximum value, rather than just ensuring the maximum is not exceeded. In other words – it’s not enough to just miss the other plane in the sky. You want a big enough safety margin.

All this should have given you a good understanding of what safety means in control, and how engineers need to formulate the logic to provide vital constraints for an automated system. If you’re interested in how different types of controllers implement these constraints – and in particular, how they cope with uncertainty – we’ll go into that in a second, more technical blog post.