‘Everyone should have a broader perspective’: Eva Tardos on scientific cross-pollination

When Dr Eva Tardos, distinguished professor of Computer Science at Cornell University, visited ETH to deliver this year’s John von Neumann lecture, we enjoyed a lengthy conversation about her career and enduring interests. One thread running through everything was how her work has often touched on social sciences – an interest that goes right back to high school.

Eva’s parents were an economist and a psychologist, and through them she started reading about politics and labour organisation. However, her natural aptitude steered her toward maths instead, and from there to computer science, while she moved geographically from (then Communist) Hungary to Germany and then California. But Eva says any culture shock came more from the academic shift than from leaving the Eastern bloc.

“I did my PhD in mathematics. The Postdoc at the University of Bonn was very mathsy,” she tells me. “And the first computer science position I had was the Berkeley Postdoc. So I was more interested in watching the difference between these two communities. Within the maths community, there was some suspicion of papers in these computer science publications because they weren’t written in proper maths style. At the beginning of these papers, they talk about the application, which is not mathematically precise. Only at some later point in the paper would they formally define the actual mathematical model they’re talking about.

“Mathematical papers always start with the model, and maybe somewhere they mention that this is something to do with technology. As a maths person, before, I was looking at [the tech-first style] with suspicion. Now, I think they’re right. If we’re not modelling real things, why do we care?”

Computer science issues can get very real indeed. Eva’s most cited article is on "Maximizing the Spread of Influence through a Social Network" – and it appeared in 2003, a year before Facebook was launched (and three years before Twitter). Although she insists there was nothing prescient about this (within the computing community, online networks were already well established), it certainly foreshadowed what has become an ever hotter topic in the two decades since then. But the big problems now are the flip side of what Eva, and her co-authors David Kempe and Jon Kleinberg, addressed 20 years ago.

“The paper is about only half of the story. What is the right algorithm to spread an idea, to convince people of something? Vote for Biden, vote for Trump. But with the spread of online networks, there are many other important phenomena. One new thing is – often attributed to Russians, but they’re probably not the only ones doing it – they want polarisation. They want some people to be very strongly for abortion and other people to be very strongly against abortion, and they should please fight with each other. So the goal is different. It’s some of the same underlying phenomena that spreads these goals, but it’s different questions.”
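For readers who want to see what “the right algorithm to spread an idea” might look like in practice, here is a minimal sketch. It assumes a simple independent-cascade model, in which each newly convinced person gets one chance to convince each neighbour with a fixed probability, and it picks seed people greedily by simulated spread. The function names, the toy graph and the parameter values are all illustrative assumptions, not a reproduction of the paper's method.

```python
import random


def simulate_cascade(graph, seeds, prob, rng):
    """Run one independent-cascade simulation and return how many nodes end up active."""
    active = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier = []
        for node in frontier:
            for neighbour in graph.get(node, []):
                # Each newly active node gets a single chance per inactive neighbour.
                if neighbour not in active and rng.random() < prob:
                    active.add(neighbour)
                    next_frontier.append(neighbour)
        frontier = next_frontier
    return len(active)


def expected_spread(graph, seeds, prob, runs, rng):
    """Estimate the average cascade size by repeated simulation."""
    return sum(simulate_cascade(graph, seeds, prob, rng) for _ in range(runs)) / runs


def greedy_seeds(graph, k, prob=0.1, runs=200, seed=0):
    """Greedily add the node whose inclusion gives the largest estimated spread."""
    rng = random.Random(seed)
    nodes = sorted(set(graph) | {n for nbs in graph.values() for n in nbs})
    chosen = []
    for _ in range(min(k, len(nodes))):
        best = max(
            (n for n in nodes if n not in chosen),
            key=lambda n: expected_spread(graph, chosen + [n], prob, runs, rng),
        )
        chosen.append(best)
    return chosen


# Hypothetical toy network: "a" talks to three people, "e" to one.
toy_graph = {"a": ["b", "c", "d"], "e": ["f"]}
picked = greedy_seeds(toy_graph, k=2, prob=1.0, runs=1)
```

With the probability set to 1 the simulation is deterministic, so the sketch simply prefers the people who can reach the most others. With smaller probabilities, the estimate depends on the number of simulation runs.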

Whether considering how to convince the biggest possible audience of your ideas, or how to get them fighting, in both cases the opposite question is: how do you prevent this? In the case of polarisation, how can you combat misinformation?

Eva suggests “inoculating” social media users against fake news, drawing an analogy with Covid vaccination campaigns. “They had to declare priorities. If there isn’t enough vaccine for everyone, there is a trade-off. Do you vaccinate the ones that are most vulnerable? Or the ones that spread it the most, like medical professionals and store workers?

“Targeting those who spread it the most is the network intervention. We try to protect the network. So in the case of polarisation of opinion, to protect someone against fake news, you flood them with facts. You want them to be very knowledgeable, so that they can spot fake news. That's the optimal vaccination, that’s the intervention.

“Again, it’s great if you can protect everyone and make sure that everyone is inoculated. But if you have a limited budget, then to have the greatest impact you need to target your messages. Who are the important people for those topics?”

Eva acknowledges that it’s not a foolproof solution. “This is making a pretty simple assumption, that if I send you something that counteracts the fake news, that lowers the probability that you get infected by the fake news or spread it. I'm modelling psychology in a very, very simple way. But I think it is reasonable as a first approximation.”
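The budget trade-off Eva describes can be illustrated with a small sketch. It makes an even stronger simplification than hers: an inoculated person is assumed to be fully immune, neither believing nor forwarding the fake story, rather than merely less likely to do so. The graph, the names and the greedy selection rule are hypothetical choices for illustration only.

```python
from collections import deque


def reach(graph, sources, blocked):
    """Count the people a story reaches when blocked people neither accept nor forward it."""
    seen = {s for s in sources if s not in blocked}
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        for neighbour in graph.get(node, []):
            if neighbour not in seen and neighbour not in blocked:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen)


def pick_inoculations(graph, sources, budget):
    """Greedily inoculate the person whose protection shrinks the reach the most."""
    candidates = set(graph) | {n for nbs in graph.values() for n in nbs}
    candidates -= set(sources)
    blocked = set()
    for _ in range(budget):
        remaining = sorted(candidates - blocked)
        if not remaining:
            break
        best = min(remaining, key=lambda n: reach(graph, sources, blocked | {n}))
        blocked.add(best)
    return blocked


# Hypothetical network: the source "s" reaches a well-connected "hub" and one other person.
toy_graph = {"s": ["hub", "d"], "hub": ["a", "b", "c"]}
protected = pick_inoculations(toy_graph, ["s"], budget=1)
```

In this toy network, a budget of one is best spent on the hub, because protecting it also shields everyone who would only have heard the story through it.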

The game of fair allocation

Another networking topic that drew Eva’s interest early on, and that has kept her attention over the decades, is traffic routing – and game theory.

“Around 1999 at Berkeley, Christos Papadimitriou was trying to convince our community that we should think about the Internet and networks as an economic system. Every router is selfishly optimising, or if you prefer, simplistically optimising…. Well, if you want to know how simple, myopically optimising systems interact with each other, that's something that economics has been doing for centuries. So I saw a connection. I had been working on traffic routing (among many other things) from early on, and then I saw this interesting economics aspect.”

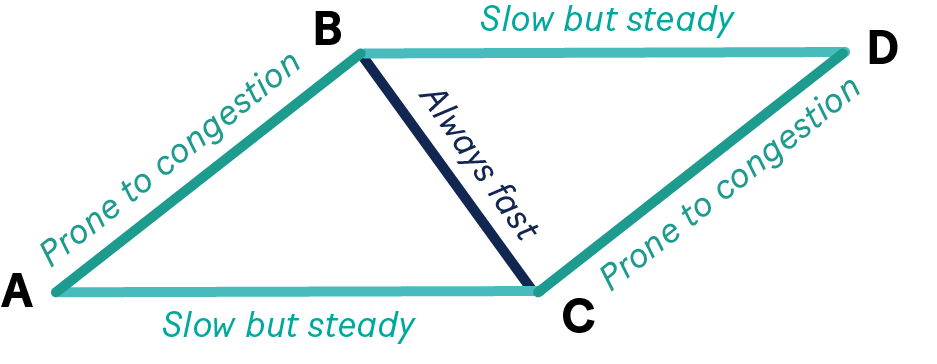

A specific topic that sparked her interest was Braess’ paradox: the counter-intuitive discovery that in a congested traffic network, closing certain roads may actually shorten journey times. When everyone individually chooses to take the “best” road, that quickly becomes the worst road. Conversely, closing off that too-tempting option results in shorter travel times for everyone. (This has even been empirically demonstrated in New York.)
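The paradox is easiest to see with numbers. The sketch below uses a common textbook-style illustration, which is an assumption here and not taken from Eva's work: 4,000 drivers travel from Start to End, two road segments take 45 minutes regardless of traffic, two take (number of drivers) / 100 minutes, and a free shortcut links the two congestible segments.

```python
def route_times(top, bottom, shortcut):
    """Return minutes per route, given how many drivers use each route.

    top:      Start -> A -> End      (congestible, then fixed 45)
    bottom:   Start -> B -> End      (fixed 45, then congestible)
    shortcut: Start -> A -> B -> End (both congestible segments, free link A -> B)
    """
    start_to_a = (top + shortcut) / 100   # slows down as more drivers use it
    b_to_end = (bottom + shortcut) / 100  # slows down as more drivers use it
    return {
        "top": start_to_a + 45,
        "bottom": 45 + b_to_end,
        "shortcut": start_to_a + 0 + b_to_end,
    }


def is_equilibrium(flows, allowed):
    """True if no driver on a used route could switch to a faster allowed route."""
    times = route_times(**flows)
    best = min(times[r] for r in allowed)
    return all(times[r] <= best + 1e-9 for r in allowed if flows[r] > 0)


# Shortcut closed: drivers split evenly between the two routes.
closed = {"top": 2000, "bottom": 2000, "shortcut": 0}
# Shortcut open: everyone is drawn onto it.
opened = {"top": 0, "bottom": 0, "shortcut": 4000}

closed_time = route_times(**closed)["top"]       # 65 minutes
opened_time = route_times(**opened)["shortcut"]  # 80 minutes
```

With the shortcut closed, everyone needs 65 minutes. With it open, the even split is no longer stable, since any single driver would save time by switching, and once everyone has switched the journey takes 80 minutes for all.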

With roads as an analogy for internet traffic, does that mean we should be employing traffic direction strategies to deal with bandwidth congestion? Laying higher-bandwidth cables may be easier than building new highways, but it is still expensive. Levying charges for bandwidth usage is impractical, since it is too expensive to collect the fees. So, since the 1990s, computer scientists have been considering alternative ways to improve network speed, such as reserving certain routes for companies that have paid for higher-speed connections between their offices.

“This is not so easy to implement, as the routers would need to know which packets have priority. But it is an option that was considered in the earlier days of the Internet,” Eva explains. “So the question we considered is: what is a better solution to traffic delays, adding a bit more bandwidth and allowing routers to myopically choose the shortest path? Or directing traffic?”

In fact, it turns out, Braess’ paradox is not the key to success here. “Given the amount of traffic people want to send, if you're choosing between taxing them, or organising how they send their data, versus giving them a bit more bandwidth, the better option is giving them a bit more bandwidth.”

The importance of cross-pollination

While economics has been an important perspective for her work, the influence runs both ways.

“Something I'm very proud of is that my work has had some impact on convincing economists to care about some things that computer science people always knew to care about,” she says. “When CS people evaluate a system, we don't ask, ‘Is it perfectly efficient, yes or no?’ Instead we ask, ‘What's the efficiency loss?’ And we certainly accept that, whether because of simplicity, or what information is available, or whatever, you're not going to make the best decision. But as long as the efficiency loss is not too bad, it might be worth it. There’s a trade-off.”

In contrast, economists tend to look for a more absolute improvement. “If there's any efficiency loss, then they should change the system. But nowadays, many of them are talking about efficiency loss. Like, ‘This system is not so bad, the efficiency loss is only a few percent.’ I'm not making a decision, whether it's okay or not. I’m just working out what the loss is, and I leave it to some policy maker. ‘Here's the information you need for making decisions. You can make the system more complicated, but the efficiency gain is only 10%. Is it worth it? Your call.’”
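To make “efficiency loss” concrete, here is a minimal sketch using a standard two-road toy example, chosen for illustration and not drawn from the interview. One road always costs 1 unit of time; the other costs x, where x is the fraction of traffic using it. Left to themselves, all drivers take the second road, because it never costs more than the first. The function names and the grid search are assumptions of this sketch.

```python
def average_cost(x):
    """Average travel cost when a fraction x of traffic uses the congestible road."""
    # The congestible road costs x per driver; the fixed road costs 1 per driver.
    return x * x + (1 - x) * 1.0


def efficiency_ratio(steps=1000):
    """Ratio of the selfish outcome's cost to the best achievable cost."""
    selfish = average_cost(1.0)  # everyone crowds onto the congestible road
    optimal = min(average_cost(i / steps) for i in range(steps + 1))
    return selfish / optimal


ratio = efficiency_ratio()  # about 1.33 in this toy example
```

In this toy case the uncoordinated outcome costs about a third more than the best possible split. Whether a loss of that size justifies a more complicated system is exactly the kind of call Eva leaves to the policy maker.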

Staying within a narrow research field means losing out on valuable insights. Eva says: “I think everyone should have a broader perspective. As a human, as a researcher, as a teacher.

“And the same applies if you're a researcher in industry; you should care about the impact of your technology in a broader sense.”

At Cornell, she says, students often come specifically because of its reputation not just as a strong tech school but as a full-fledged university with a strong liberal arts programme. With this culture well established, the faculty are working actively to embed a broader consciousness in computing students, whether through inviting guest speakers from other disciplines (such as a recent labour economist, who lectured on AI’s impact on employment) or through a wide menu of ethics courses. “The course that I most like, in this space, explicitly stays away from the word ethics,” she smiles.

“It's called ‘Choice and Consequences in Computing.’ We don’t want to tell you what’s ethical and what’s not ethical, but just that you need to think about it. You make choices in what you create. And there are consequences.”