Sounding Smart Isn’t Enough: How We Can Make Machine Learning Safer

Since the launch of ChatGPT just three years ago, artificial intelligence has dominated conversations and investment to an unprecedented extent. The chatbot set new records for adoption, reaching one million users faster than any platform before it. Current trends indicate that AI is rapidly being integrated into nearly every device we use, from smartphones to washing machines. But a question is increasingly being raised: is it safe to rely so heavily on this technology, given its known shortcomings?

We know that AI models are prone to “hallucinations”: statements that sound correct but are actually false. The troubling part is that AI models are often extremely confident about these wrong answers or, on the other hand, so gullible that they agree with whatever the user says, just to keep them happy. If you use AI models regularly, you may have seen this pattern yourself. First the chatbot gives a convincing-sounding answer; then, once challenged, it agrees that its first statement was wrong, a correction that perhaps comes rather too late.

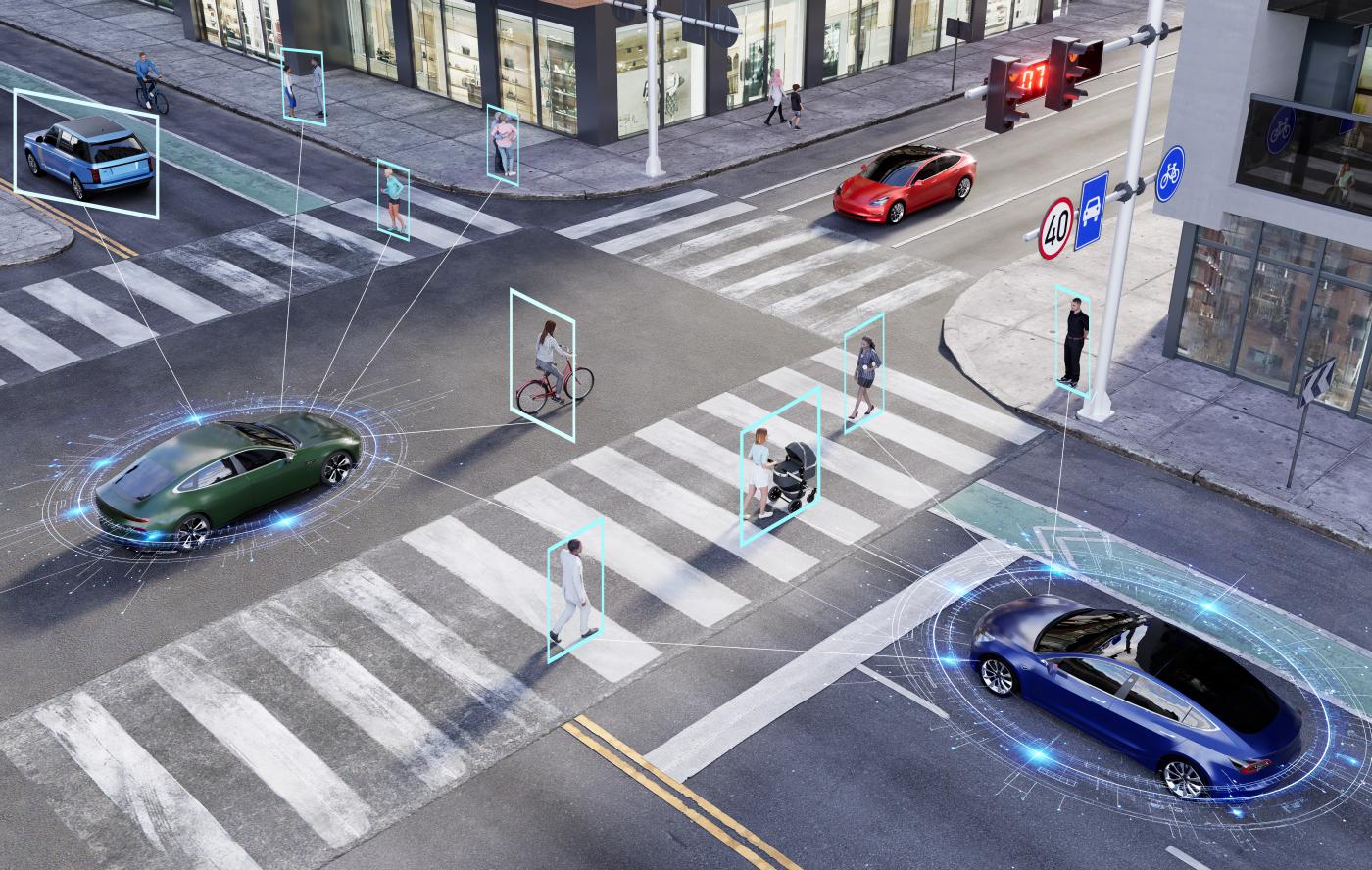

The same reliability concerns extend to vision-based AI systems, which “see” and interpret images, whether they are distinguishing between a cat and a dog, recognizing a car’s license plate at an intersection or, in the future, guiding household robots and autonomous vehicles that take children to school. These applications rely on visual perception much as humans do, but AI vision is far more fragile than ours.

Machine learning models, the foundation of AI, can be surprisingly vulnerable to even minor alterations in their input. This vulnerability was first documented in conventional machine learning models, well before the rise of today’s chatbots: introducing a tiny amount of carefully crafted noise into an image can cause a model to misclassify it, often with high confidence. For example, an AI tool might correctly identify a panda in one image but, after subtle, carefully computed noise is added, label the same image as a gibbon. Similarly, a few small patches on a stop sign can trick an AI into perceiving it as a speed limit sign.
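To see how such tiny noise can be so effective, here is a minimal sketch of the fast gradient sign method (FGSM), one standard way to compute adversarial noise, applied to a toy linear classifier rather than a deep network. The weights, the image size, and the step size `eps` are made-up values for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(w, b, x):
    # Class 1 if the logistic probability exceeds 0.5, i.e. if w @ x + b > 0
    return int(w @ x + b > 0)

def fgsm(w, b, x, label, eps):
    """Fast gradient sign method for a logistic-regression classifier.

    Moves every input feature by +/- eps in the direction that increases
    the cross-entropy loss for the true label (no clipping to [0, 1], for simplicity).
    """
    p = sigmoid(w @ x + b)
    grad_x = (p - label) * w          # gradient of the cross-entropy loss w.r.t. x
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 10_000                                   # e.g. a 100 x 100 grayscale image, flattened
w = rng.choice([-0.01, 0.01], size=d)        # hypothetical trained weights
x = rng.uniform(0.0, 1.0, size=d)            # hypothetical image, pixels in [0, 1]
b = 1.0 - w @ x                              # chosen so the clean score w @ x + b is 1

eps = 0.02                                   # at most a 0.02 change per pixel
x_adv = fgsm(w, b, x, label=1, eps=eps)

print(predict(w, b, x), predict(w, b, x_adv))       # 1 0
print(round(float(np.max(np.abs(x_adv - x))), 6))   # 0.02
```

Each pixel moves by only 0.02, but every nudge is aligned against its weight, so the changes add up across all 10,000 pixels: the score drops by `eps` times the sum of the absolute weights (here, 2), which is enough to flip the label.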

These manipulated inputs are known as adversarial examples, and a model’s ability to withstand them is called adversarial robustness. While this weakness may seem like a minor flaw, the implications are serious. Consider an autonomous car that misreads a stop sign as a speed limit sign: such an error could lead to a catastrophic accident. This fragility makes today’s AI models unreliable for safety-critical applications.

Dependable learning

During my PhD, I studied machine learning through the lens of systems and control theory. This is the same field of mathematics and engineering that keeps airplanes steady on autopilot, maintains the temperature of our rooms, drives car assembly lines, and ensures satellites keep orbiting on their correct paths.

Control theory’s success rests on decades of rigorous mathematical development. Our research was motivated by recently discovered parallels between machine learning and the well-established field of systems theory. This connection makes it possible to analyze these black-box models with a wealth of proven tools. So, with the guidance of my advisor, Professor Giancarlo Ferrari Trecate, I set out to bring machine learning and control theory together. The result is what we call dependable machine learning.
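One widely cited parallel of this kind (an illustration, not necessarily the specific connection this research builds on) is that a residual network, in which each layer adds a correction to its input, x_{k+1} = x_k + h f(x_k), is exactly the forward-Euler discretization of the differential equation dx/dt = f(x). The sketch below uses a fixed, hypothetical f(x) = -x in place of a trained layer, and shows that as the network gets deeper its output approaches the solution of that equation.

```python
import numpy as np

def vector_field(x):
    # Hypothetical dynamics dx/dt = f(x); here a simple decay f(x) = -x
    return -x

def residual_network(x0, num_layers, total_time=1.0):
    """Forward pass of a residual network x_{k+1} = x_k + h * f(x_k).

    Each residual layer is exactly one forward-Euler step of size h.
    """
    h = total_time / num_layers
    x = x0
    for _ in range(num_layers):
        x = x + h * vector_field(x)
    return x

x0 = np.array([1.0, -2.0])
exact = x0 * np.exp(-1.0)          # exact solution of dx/dt = -x at t = 1

for depth in (2, 10, 100):
    error = np.max(np.abs(residual_network(x0, depth) - exact))
    print(depth, error)            # the error shrinks as the network gets deeper
```

In a real residual network, f would be a trained layer with its own parameters, but the same reading applies, which is what allows stability theory and other tools for dynamical systems to be brought to bear on deep networks.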

By “dependable”, we mean that a machine learning model should behave as expected, even in scenarios it has never seen before, backed by solid mathematical guarantees. This opens the door to making AI interpretable, bounding its behavior in uncharted situations, and ultimately making it safe to use in critical applications.

Of course, “dependability” can mean different things in different contexts: ensuring an airplane remains stable despite wind gusts, building machine learning models of a chemical plant that still obey the laws of chemistry, or designing a chatbot that doesn’t hallucinate. Researchers have tried to make AI more dependable with conventional methods, such as constraining the parameters of models during training or restricting their outputs. While these approaches can help, they often add a heavy computational burden or fail to guarantee dependability rigorously.
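As an illustration of the “constrain the parameters” approach, and of where its computational cost comes from, here is a minimal sketch of projected gradient descent that keeps a layer’s weight matrix at spectral norm at most 1 (a common way to bound how much a layer can amplify input perturbations). The matrix size, learning rate, and random gradient are placeholders, not values from this research.

```python
import numpy as np

def project_spectral_norm(W, max_norm=1.0):
    """Project W onto {W : ||W||_2 <= max_norm} by clipping its singular values."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return U @ np.diag(np.minimum(s, max_norm)) @ Vt

def projected_gradient_step(W, grad, lr, max_norm=1.0):
    # An ordinary gradient step, followed by a full SVD to restore the constraint
    return project_spectral_norm(W - lr * grad, max_norm)

rng = np.random.default_rng(1)
W = rng.normal(size=(64, 64))        # hypothetical layer weights
grad = rng.normal(size=(64, 64))     # hypothetical gradient of some training loss
W_new = projected_gradient_step(W, grad, lr=0.1)

# Largest singular value before and after the projected step
print(np.linalg.norm(W, 2), np.linalg.norm(W_new, 2))
```

The projection requires a full singular value decomposition, whose cost grows roughly with the cube of the layer width, after every single update; this is the kind of overhead that adds up quickly in large networks.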

The traditional way to make a neural network respect given constraints (such as the laws of thermodynamics, stability, and so on) is to encourage the desired behavior through the loss function, that is, by adding a penalty term that grows whenever the constraint is violated. Training then adjusts the network’s parameters to minimize this combined loss. However, a small penalty on the training data does not guarantee that the constraint will hold on new inputs: the desired behavior is encouraged, not enforced.
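A minimal sketch of why a penalty is weaker than a guarantee, assuming a toy quadratic model and a hypothetical requirement that its output never be negative (for example, a predicted energy consumption). Because the penalty is evaluated only where there is training data, a parameter setting can earn a perfect zero penalty and still break the rule at an input the training set never covered.

```python
import numpy as np

def model(params, x):
    # Hypothetical quadratic model: f(x) = a x^2 + b x + c
    a, b, c = params
    return a * x**2 + b * x + c

def constraint_penalty(params, x_train):
    """Soft penalty for the requirement f(x) >= 0, evaluated only at training inputs."""
    violation = np.maximum(0.0, -model(params, x_train))
    return np.sum(violation**2)

def total_loss(params, x_train, y_train, lam=10.0):
    # Data-fit term plus a weighted penalty: the constraint is encouraged, not enforced
    data_loss = np.mean((model(params, x_train) - y_train) ** 2)
    return data_loss + lam * constraint_penalty(params, x_train)

x_train = np.array([0.0, 1.0, 2.0])
y_train = np.array([2.0, 0.1, 0.1])   # made-up targets
params = (1.0, -3.0, 2.1)             # a parameter setting an optimizer could reach

print(total_loss(params, x_train, y_train))
print(constraint_penalty(params, x_train))   # 0.0: no violation on the training inputs
print(model(params, 1.5) < 0)                # True: the constraint fails in between
```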

To address this, we explored the concept of free parametrization. In simple terms, this means designing models so that, no matter how the parameters are tuned, the neural network automatically respects the desired properties. The parameters can therefore be set freely, for instance by a standard, unconstrained training algorithm, and the goal is still satisfied by design. Because training no longer has to check or enforce the constraints, it needs far less computation, which makes such models not only more reliable but also far more scalable and energy efficient.
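To make the idea concrete, here is a minimal sketch of a free parametrization for one of the properties mentioned above, stability, using a standard textbook construction for linear systems dx/dt = A x rather than the neural-network parametrizations developed in this research. Whatever real values the raw parameters `M` and `S` take, the resulting matrix `A` has eigenvalues with strictly negative real parts, so the system is stable by construction.

```python
import numpy as np

def stable_matrix(M, S, eps=1e-3):
    """Map unconstrained parameters (M, S) to a stable (Hurwitz) matrix A.

    A = -(M M^T + eps I) + (S - S^T). The symmetric part of A is
    -(M M^T + eps I), which is negative definite, so every eigenvalue
    of A has a negative real part, whatever values M and S take.
    """
    n = M.shape[0]
    return -(M @ M.T + eps * np.eye(n)) + (S - S.T)

rng = np.random.default_rng(42)
worst = -np.inf
for _ in range(1000):
    M = rng.normal(scale=10.0, size=(5, 5))   # any real values are allowed
    S = rng.normal(scale=10.0, size=(5, 5))
    A = stable_matrix(M, S)
    worst = max(worst, np.max(np.linalg.eigvals(A).real))

print(worst < 0)   # True: every random parameter draw gave a stable system
```

An optimizer can therefore update `M` and `S` with plain gradient steps: no penalty, projection, or post-hoc check is needed, because an unstable `A` simply cannot be produced.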

During my PhD, we applied these ideas in several settings. One example is making AI models more robust in image classification tasks. Image classification (teaching a model to label pictures) is central to many real-world applications: from vision in robots and autonomous cars to handwriting recognition and digitizing old books.
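One way such guarantees can work for image classification, sketched under simplifying assumptions (a single linear layer, 10 classes, made-up 28 × 28 inputs; not the architecture used in this research): if the map from image to class scores is 1-Lipschitz, meaning it never amplifies a change to its input, then the gap between the top two scores gives a certified radius, and no perturbation with a smaller ℓ2 norm can change the predicted label. Dividing an arbitrary matrix by its spectral norm is a simple free parametrization of such a layer.

```python
import numpy as np

def lipschitz_layer(V):
    """Map any nonzero matrix V to W = V / ||V||_2, whose spectral norm is 1.

    Then ||W x - W x'||_2 <= ||x - x'||_2 for all inputs x, x'.
    """
    return V / np.linalg.norm(V, 2)

def certified_radius(logits):
    """l2 radius within which a 1-Lipschitz classifier's top class cannot change.

    A perturbation delta shifts the gap between any two scores by at most
    sqrt(2) * ||delta||, so the top class is safe while ||delta|| < margin / sqrt(2).
    """
    second, first = np.sort(logits)[-2:]
    return (first - second) / np.sqrt(2.0)

rng = np.random.default_rng(0)
W = lipschitz_layer(rng.normal(size=(10, 784)))   # hypothetical: 10 classes, 28 x 28 inputs
x = rng.uniform(0.0, 1.0, size=784)                # hypothetical input image
label = np.argmax(W @ x)
radius = certified_radius(W @ x)

flips = 0
for _ in range(1000):
    delta = rng.normal(size=784)
    delta *= 0.99 * radius / np.linalg.norm(delta)   # random perturbation inside the radius
    flips += int(np.argmax(W @ (x + delta)) != label)

print(flips)   # 0
```

The random perturbations only spot-check the certificate; the guarantee itself follows from the Lipschitz bound. It also carries over to deep networks built from 1-Lipschitz layers and activations, although those need more elaborate parametrizations than this single-layer example.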

But dependable AI goes far beyond image classification. Consider something as everyday as predicting the temperature in your apartment. This might sound simple, but many factors influence temperature: the angle of the sun, furniture placement, how rooms share walls, the outside weather, and even the season. Writing down an exact model for all of this is not only tedious but also requires deep expertise. And while the laws of thermodynamics are well known, there has been no straightforward way to combine them with AI models.

That’s where our work comes in. We embedded physical laws directly into the structure of machine learning models, so that they always obey the physics, whatever values the parameters take. We call these physics-consistent models. In our experiments, such models reliably predicted apartment temperatures up to three days in advance, enabling better control of heating and cooling. This means saving energy and, ultimately, helping the planet. This work was carried out in a fruitful collaboration with Professor Colin Jones and Loris Di Natale.
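A toy sketch of what “obeying the physics whatever the parameters” can look like, assuming a single room described by a one-line heat balance. This is a deliberately simplified, hypothetical model, not the physics-consistent models from this research. Passing the raw parameters through sigmoid and softplus fixes the ranges and signs that thermodynamics dictates, so the two properties checked below hold for every parameter value an optimizer might reach.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softplus(z):
    # Smooth map from any real number to a strictly positive one
    return np.log1p(np.exp(z))

def simulate(raw, T0, T_out, heating):
    """Roll out T_{k+1} = T_k + alpha * (T_out_k - T_k) + beta * heating_k.

    alpha = sigmoid(raw[0]) lies in (0, 1) and beta = softplus(raw[1]) > 0 for ANY
    real raw parameters, so by construction:
      - without heating, the room drifts toward the outdoor temperature
        without overshooting it (heat flows from warm to cold), and
      - more heating never makes the room colder.
    """
    alpha, beta = sigmoid(raw[0]), softplus(raw[1])
    T = [T0]
    for T_out_k, u_k in zip(T_out, heating):
        T.append(T[-1] + alpha * (T_out_k - T[-1]) + beta * u_k)
    return np.array(T)

steps = 3 * 24 * 4                  # three days at a hypothetical 15-minute resolution
T_out = np.full(steps, 5.0)         # made-up constant outdoor temperature (deg C)
rng = np.random.default_rng(0)

ok = True
for raw in rng.normal(scale=3.0, size=(200, 2)):    # arbitrary parameter values
    cold = simulate(raw, 20.0, T_out, np.zeros(steps))
    warm = simulate(raw, 20.0, T_out, np.ones(steps))
    ok &= bool(np.all(np.diff(cold) <= 0) and np.all(cold >= 5.0))
    ok &= bool(np.all(warm[1:] > cold[1:]))

print(ok)   # True
```

In a learned model, the raw parameters (or a neural network that produces them) would be fitted to measured data; it is the structure, not the training, that guarantees the physical behavior.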

Dependable learning offers a path toward trustworthy AI that can be safely deployed in our daily lives, even in safety-critical applications. By merging machine learning with control theory, we can harness the strengths of both: the expressiveness and generalization ability of AI, combined with the rigorous guarantees of control theory. We believe this framework lays the foundation for interpretable and safe AI.